Are you ready to dive into the world of building custom embedded Linux systems? This article will guide you through the process, from selecting hardware to configuring the software, so you can create your own specialized devices.

Microcontroller vs Microprocessor Differences

When building embedded Linux systems, it’s important to understand the differences between a microcontroller and a microprocessor.

Microcontrollers integrate a CPU, memory, and peripherals on a single chip and usually run bare-metal firmware or a real-time operating system. Microprocessors, on the other hand, rely on external RAM and storage, offer more processing power, and typically include a memory management unit (MMU), which most Linux distributions and applications assume is present.

Microcontrollers are often used in applications such as consumer electronics, IoT sensors, and industrial automation, while microprocessors are found in computers and servers as well as in Linux-based embedded devices such as routers and gateways.

These differences drive processor selection: the part you choose has to meet the project's requirements for memory, performance, power, and cost.

Microcontrollers are typically used in applications where cost, power consumption, and size are critical factors, while microprocessors are used in applications that require more processing power and advanced features.

Why Use Linux in Embedded Systems?

Using **Linux** in **embedded systems** offers a wide range of benefits that make it an attractive choice for developers.

**Linux** provides a **flexible and customizable** platform that can be tailored to the specific requirements of an embedded system. Developers can leave out components the device does not need, which often yields **highly optimized** images with a smaller footprint and a shorter boot time.

Additionally, **Linux** is an **open-source** operating system, which means that developers have access to the **source code** and can modify it to suit their needs. This level of **customization** is particularly important in embedded systems, where every component must be carefully optimized for performance and efficiency.

Furthermore, **Linux** has a **large and active community** of developers who are constantly working to improve the operating system and add new features. This means that developers can rely on a **wealth of resources** and **support** when building embedded systems with **Linux**.

Networking & Interoperability

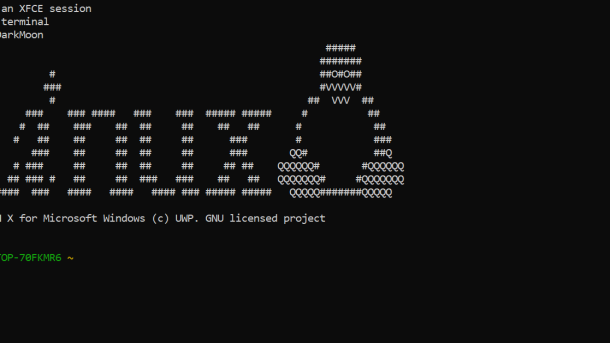

One key aspect of networking in embedded systems is the ability to connect to the internet, enabling devices to access remote resources and services. Whether it’s for firmware updates, data retrieval, or remote monitoring, having a reliable network connection is paramount. **Ubuntu** is a popular Linux distribution with a mature networking stack, which makes it a reasonable choice on boards that have enough storage and memory to run a full distribution.

In terms of interoperability, it is crucial to ensure that **device drivers** are compatible with the hardware being used. This involves understanding the underlying processor design, such as **ARM Cortex-A** or **ARM9** (**ARM Cortex-M** parts lack an MMU and are limited to no-MMU Linux builds), and how it interacts with the kernel and other system components. By leveraging open source libraries and source code, developers can create custom drivers that integrate cleanly with the Linux operating system.

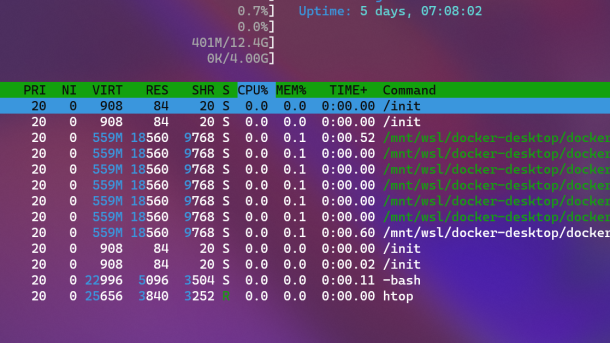

Additionally, deploying embedded Linux systems requires careful consideration of memory management, file systems, and booting mechanisms. Understanding how these components interact with the hardware is essential for ensuring optimal performance and reliability. By utilizing tools like the **RPM Package Manager** and **Docker**, developers can streamline the deployment process and automate software updates.

Security Considerations

When building embedded Linux systems, it is crucial to consider security measures to protect your device and data. One important aspect to focus on is securing the bootloader and kernel to prevent unauthorized access or modifications. Utilizing secure boot mechanisms and signing images can help ensure the integrity of your system.
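As a rough illustration of the integrity check that image signing builds on, the sketch below compares the SHA-256 digest of an image against a trusted value. It is only a digest comparison, not a signature scheme: real secure boot verifies a cryptographic signature against a key anchored in hardware. The function names and the sample payload are hypothetical.

```python
import hashlib
import hmac


def image_digest(image: bytes) -> str:
    """Return the SHA-256 digest of an image as a hex string."""
    return hashlib.sha256(image).hexdigest()


def image_matches(image: bytes, expected_hex: str) -> bool:
    """Compare digests in constant time so timing does not reveal partial matches."""
    return hmac.compare_digest(image_digest(image), expected_hex.lower())


# Hypothetical stand-in for the bytes of a kernel or root filesystem image.
firmware = b"example firmware payload"
trusted_digest = image_digest(firmware)

print(image_matches(firmware, trusted_digest))         # True
print(image_matches(firmware + b"x", trusted_digest))  # False
```

In practice the trusted digest would be computed at build time and delivered through a channel the device already trusts, rather than derived from the image being checked.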

Another key consideration is implementing proper access control measures to restrict privileges and permissions for users and applications. This includes setting up **firewalls** and utilizing **encryption** to safeguard communication channels and data storage.

Regularly updating your system with the latest security patches and fixes is essential to address any vulnerabilities that may arise. It is also recommended to disable unnecessary services and features to reduce the attack surface of your device.

When developing embedded Linux systems, it is important to follow best practices for secure coding and software design. This includes validating and **sanitizing input** from users and external interfaces, and implementing secure communication protocols.

Furthermore, incorporating security features such as secure bootloaders, **memory protection units (MPUs)**, and **hardware encryption** can enhance the overall security of your embedded Linux system.

By prioritizing security considerations during the development and deployment of embedded Linux systems, you can help mitigate potential risks and ensure the integrity and confidentiality of your device and data.

Filesystems & Databases

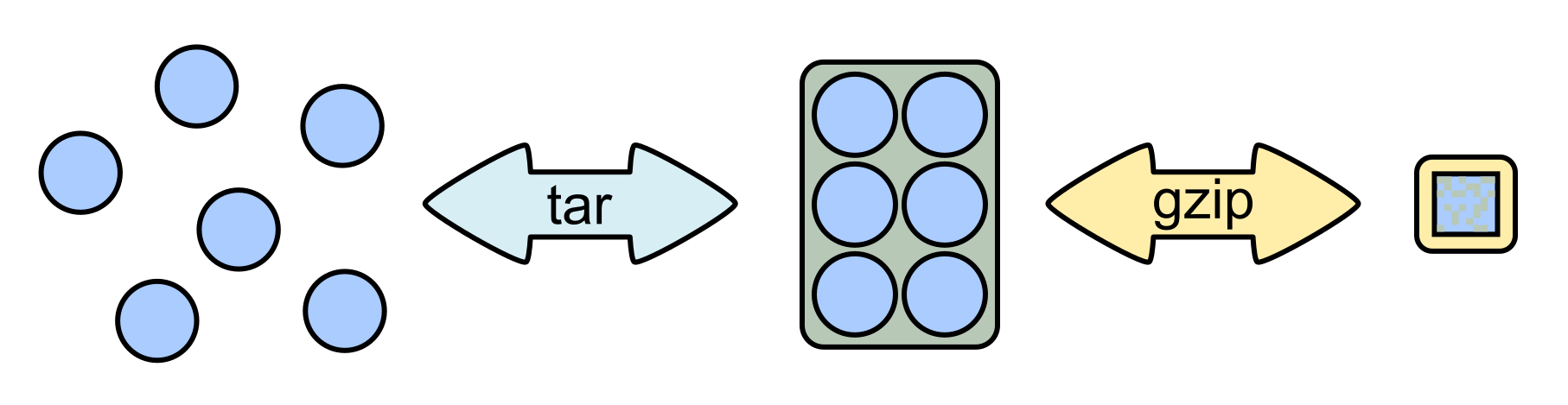

When building embedded Linux systems, understanding filesystems and databases is crucial. Filesystems are responsible for organizing and storing data on the device, while databases manage the structured data within the system.

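A common filesystem choice on block storage such as eMMC or SD cards is ext4, known for its reliability and performance. Raw NAND or NOR flash usually calls for a flash-aware filesystem such as UBIFS or JFFS2 instead. Databases like SQLite are often used because they are lightweight and run in-process, with no separate server to manage.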
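To make the SQLite option concrete, here is a minimal sketch that logs a few readings and queries them back using Python's standard `sqlite3` module. The table layout, sensor name, and sample values are assumptions made for illustration.

```python
import sqlite3

# An in-memory database keeps the example self-contained; a real device
# would pass a path on its persistent storage instead.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE readings ("
    "ts INTEGER NOT NULL, sensor TEXT NOT NULL, value REAL NOT NULL)"
)

# Hypothetical sensor samples: (unix timestamp, sensor name, value).
samples = [
    (1700000000, "temp", 21.5),
    (1700000060, "temp", 21.7),
    (1700000120, "temp", 22.0),
]
with conn:  # commits on success, rolls back if an exception is raised
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", samples)

count, average = conn.execute(
    "SELECT COUNT(*), AVG(value) FROM readings WHERE sensor = ?", ("temp",)
).fetchone()
print(count, round(average, 2))
```

Grouping inserts into one transaction, as above, generally reduces the number of writes that reach the storage medium, which matters on flash.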

It’s important to consider the requirements of your specific project when choosing a filesystem and database. Factors such as data access speed, storage capacity, and system resources should all be taken into account.

Properly configuring and optimizing your filesystem and database can greatly impact the overall performance and efficiency of your embedded Linux system. Regular maintenance and monitoring are also essential to ensure smooth operation over time.

By familiarizing yourself with different filesystems and databases, you can make informed decisions when designing and implementing embedded Linux systems. This knowledge will help you create reliable and efficient systems tailored to your specific needs.

Multiple Processes Management

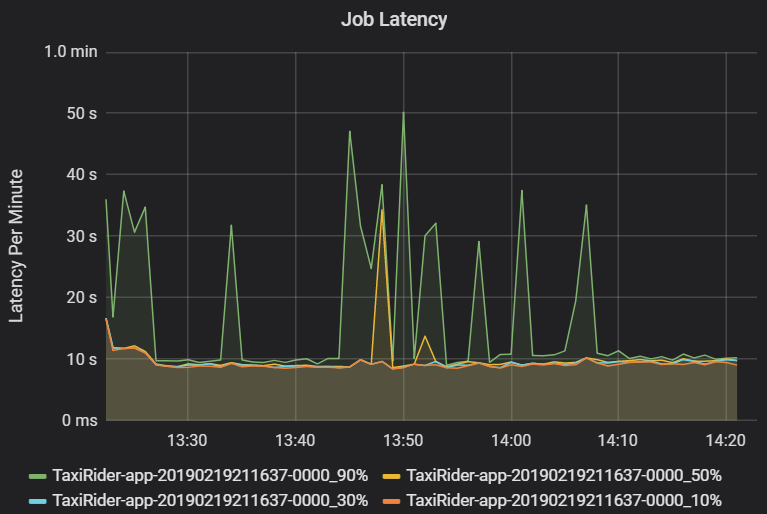

When working with embedded Linux systems, managing multiple processes efficiently is crucial. This involves overseeing various tasks simultaneously to ensure the system functions smoothly and effectively. Process management is a fundamental aspect of embedded Linux development, as it allows for optimal resource allocation and utilization.

One key consideration in multiple processes management is ensuring that each process has the necessary resources to operate effectively. This includes managing memory allocation, processor usage, and other system resources to prevent bottlenecks and ensure a seamless operation. Properly managing processes also involves handling dependencies between different tasks, as well as prioritizing critical processes to maintain system stability.
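One simple form of prioritization is to lower the scheduling priority of non-critical work. The sketch below, which assumes a POSIX system, starts a child process with a raised nice value so that it yields CPU time to more important processes. The helper name and the child command are hypothetical.

```python
import os
import subprocess
import sys


def run_with_lower_priority(args, niceness=5):
    """Run a command as a child process with its nice value raised by `niceness`."""
    return subprocess.run(
        args,
        preexec_fn=lambda: os.nice(niceness),  # runs in the child just before exec
        capture_output=True,
        text=True,
        check=True,
    )


# Hypothetical background task: the child simply reports its own nice value.
child = [sys.executable, "-c", "import os; print(os.nice(0))"]
result = run_with_lower_priority(child)
parent_nice = os.nice(0)  # an increment of 0 just reads the current value
child_nice = int(result.stdout)
print("parent nice:", parent_nice, "child nice:", child_nice)
```

Raising the nice value needs no special privileges; lowering it below the parent's value normally does.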

In embedded Linux systems, the kernel plays a crucial role in managing processes. The kernel is responsible for coordinating the execution of various tasks, handling system calls, and providing a secure environment for processes to run. Understanding how the kernel interacts with processes is essential for efficient process management in embedded systems.

Additionally, developers working on embedded Linux systems should be familiar with build systems such as the Yocto Project and OpenEmbedded for building custom Linux distributions tailored to specific hardware requirements. These tools do not manage processes at run time; rather, they determine which kernel configuration, init system, and services end up in the image, and therefore which processes start on the device.

By mastering the principles of multiple processes management in embedded Linux systems, developers can create robust and reliable systems for a wide range of applications, from consumer electronics to industrial automation. With the right knowledge and tools at their disposal, developers can harness the power of Linux to build cutting-edge embedded systems that meet the demands of today’s interconnected world.

Language and Library Support

A package manager such as the RPM Package Manager can help manage software packages efficiently on your embedded system. If you choose Ubuntu as your base distribution for its wide community support, note that it uses dpkg and APT rather than RPM.

Understanding processor design across the ARM architecture family, from Cortex-M microcontrollers to Cortex-A application processors, is essential for optimizing performance on your embedded system. If you plan to run a standard Linux kernel with virtual memory, ensure that your processor includes a memory management unit (MMU).

Booting your embedded Linux system correctly is key to its functionality. Familiarize yourself with the kernel and device drivers to ensure smooth operation. Virtual machines such as VirtualBox are useful for hosting a reproducible development and test environment.

Consider the deployment environment of your embedded system, whether it be in a data center, IoT device, or computer appliance. Pay attention to software bugs and runtime systems for a seamless user experience.

Incorporating open source tools and modular programming techniques can streamline development and maintenance of your embedded Linux system. Stay informed about the latest advancements in embedded Linux, such as real-time computing and multi-core processors.

By mastering language and library support for embedded Linux systems, you can create efficient and reliable devices for a variety of applications. Keep learning and exploring new technologies to stay ahead in the ever-evolving field of embedded computing.

Hardware Isolation from Software

One way to achieve hardware isolation is by utilizing **device drivers**. These drivers act as a bridge between the hardware and the operating system, allowing the software to communicate with the hardware in a standardized manner. By isolating the hardware in this way, developers can write software that interacts with the hardware without needing to understand the intricacies of the underlying components.

Another important aspect of hardware isolation is **memory management**. By partitioning the memory of a system and allocating specific regions for different tasks, developers can prevent software from accessing areas of memory that it shouldn’t. This helps to ensure the stability and security of the system while maintaining optimal performance.

Developer Availability and Cost Factors

Developer availability and cost are crucial considerations when building embedded Linux systems.

Having skilled developers who are knowledgeable in the Yocto Project and OpenEmbedded is essential for successful project execution. Developers should have experience in kernel customization, library integration, and memory management.

The availability of developers with expertise in the ARM architecture family, and in companion microcontrollers such as AVR where the design includes them, can significantly affect project timelines and costs.

Consider the cost implications of hiring developers with experience in device driver development, runtime system configuration, and deployment environment setup.

Having a clear understanding of the deployment environment, file system requirements, and function of the system will help in estimating developer availability and cost factors accurately.

Ensure that developers are proficient in modular programming and have the necessary skills to troubleshoot software bugs efficiently.

By considering these factors, you can build embedded Linux systems effectively and efficiently, ultimately saving time and resources in the long run.

Design Workflow for Embedded Systems

When designing a workflow for embedded systems, it is important to first consider the specific requirements of the project. This includes determining the hardware components needed, the software architecture, and any other tools necessary for development.

Embedded Linux systems are popular due to their flexibility and open-source nature. They can be customized to meet the needs of a wide range of applications, from small devices to large-scale systems.

One key aspect of designing a workflow for embedded systems is selecting the appropriate kernel for the project. The kernel serves as the core of the operating system, managing hardware resources and providing essential functions for the system to run smoothly.

Next, developers need to consider the file system for the embedded system. The file system organizes and stores data on the device, ensuring that information is accessible and secure. Choosing the right file system is crucial for the overall performance and reliability of the system.

Raspberry Pi Considerations

When considering using a Raspberry Pi for your embedded Linux system, it’s important to think about the specific requirements of your project. Make sure to choose the right model of Raspberry Pi for the job, considering factors such as processing power, memory, and connectivity options.

Compatibility with any additional hardware or peripherals you plan to use is key. Ensure that the Raspberry Pi you select can support everything you need it to, whether that’s sensors, cameras, or other devices.

Keep in mind that power consumption may be a factor, especially if your embedded system will be running on battery power. Some Raspberry Pi models are more power-efficient than others, so choose accordingly to maximize the battery life of your device.

Consider the size and form factor of the Raspberry Pi, as well as any additional components you plan to add. Make sure that everything will fit together neatly and that the overall design is practical for your project’s needs.

If you’re new to working with Raspberry Pi or embedded Linux systems, consider taking a Linux training course to familiarize yourself with the basics. This will help you understand how to set up and configure your system effectively, as well as troubleshoot any issues that may arise.

Peripherals in Embedded Systems

Peripherals play a crucial role in embedded systems, providing the means to interact with the outside world. These components can include sensors, actuators, displays, and more, allowing the embedded system to sense and respond to its environment.

When building embedded Linux systems, it’s essential to understand how to interface with these peripherals effectively. This involves writing and configuring device drivers to communicate with the hardware, as well as utilizing the appropriate APIs or libraries to access the peripherals from the application software.

One common example of a peripheral in embedded systems is the General-Purpose Input/Output (GPIO), which allows for simple digital communication with external devices. Understanding how to configure and utilize GPIO pins is essential for many embedded projects.
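As a minimal sketch of GPIO access from user space, the function below drives a pin through the legacy sysfs interface under `/sys/class/gpio`. Newer kernels favor the GPIO character device (for example via libgpiod), so treat this as an illustration of the idea rather than a recommended API. The pin number and the temporary directory standing in for sysfs are assumptions that let the example run without hardware.

```python
import tempfile
from pathlib import Path


def write_gpio(pin: int, value: int, base: Path = Path("/sys/class/gpio")) -> None:
    """Drive a GPIO pin high or low through the legacy sysfs interface."""
    pin_dir = base / f"gpio{pin}"
    if not pin_dir.exists():
        # Asking the kernel to export the pin makes the gpioN directory appear.
        (base / "export").write_text(str(pin))
    (pin_dir / "direction").write_text("out")
    (pin_dir / "value").write_text("1" if value else "0")


# Demo against a temporary directory that mimics the sysfs layout, so the
# sketch runs on a machine without GPIO hardware. Pin 17 is an arbitrary choice.
with tempfile.TemporaryDirectory() as tmp:
    fake_sysfs = Path(tmp)
    (fake_sysfs / "gpio17").mkdir()
    write_gpio(17, 1, base=fake_sysfs)
    direction = (fake_sysfs / "gpio17" / "direction").read_text()
    state = (fake_sysfs / "gpio17" / "value").read_text()
    print(direction, state)
```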

In addition to GPIO, other peripherals such as I2C and SPI interfaces are commonly used in embedded systems to communicate with devices like sensors and displays. These interfaces require specific configurations and protocols to function correctly, which must be considered when designing the embedded system.

BGA Packages for Hardware Design

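When designing hardware for embedded systems, BGA packages play a crucial role. **BGA packages** are common for Linux-capable processors such as **ARM9** parts, while smaller devices such as **AVR microcontrollers** are more often supplied in leaded or QFN packages. Where a BGA part is used, it provides a compact and efficient solution for your hardware design needs.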

These packages offer a high pin count in a small form factor, making them ideal for space-constrained applications. When working with BGA packages, it is essential to pay attention to the layout and routing of your PCB to ensure signal integrity and reliability.

A well-routed board is only the first step: bringing it up also requires a good understanding of the **kernel**, **device drivers**, and **application software** needed for your embedded Linux system. By learning how to work with these components, you can create a stable and efficient system for your project.

Taking Linux training courses can help you gain the knowledge and skills necessary to work with BGA packages and build embedded Linux systems effectively. With the right training, you can confidently tackle the challenges of designing hardware for the **Internet of Things** and other embedded applications.

By mastering the intricacies of BGA packages and Linux systems, you can unlock the full potential of your hardware design projects. Invest in your education and training to develop the expertise needed to succeed in the fast-paced world of embedded systems.

Non-Volatile Storage Options

SD cards are a common non-volatile storage option for embedded Linux systems because they are cost-effective and widely available. They are easy to interface with and can store large amounts of data, making them well suited to applications that require high storage capacity.

When choosing a non-volatile storage option for your embedded Linux system, consider factors such as storage capacity, speed, and durability. It’s also important to confirm that your hardware and operating system support the chosen device.
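Durability can be estimated roughly before committing to a part. The sketch below applies a common back-of-the-envelope formula for flash storage: total writable data is capacity times rated program/erase cycles, divided by the daily write volume scaled by write amplification. It assumes ideal wear leveling, and every number in the example is hypothetical rather than taken from a datasheet.

```python
def estimated_lifetime_days(
    capacity_gb: float,
    pe_cycles: int,
    written_gb_per_day: float,
    write_amplification: float = 1.0,
) -> float:
    """Rough flash lifetime in days, assuming writes are spread evenly over the device."""
    total_writable_gb = capacity_gb * pe_cycles
    return total_writable_gb / (written_gb_per_day * write_amplification)


# Hypothetical device: 8 GB, 3000 rated cycles, 1 GB written per day,
# with each logical write costing two physical writes.
days = estimated_lifetime_days(8, 3000, 1.0, write_amplification=2.0)
print(days, "days, about", round(days / 365, 1), "years")
```

Real devices rarely achieve ideal wear leveling, so an estimate like this is best read as an upper bound.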

Besides on-board flash memory and SD cards, other non-volatile storage options for embedded Linux systems include raw NAND flash and EEPROM. NAND flash is commonly used in consumer electronics due to its high storage capacity, while EEPROM offers low power consumption and long data retention but only small capacities, so it typically holds configuration or calibration data.

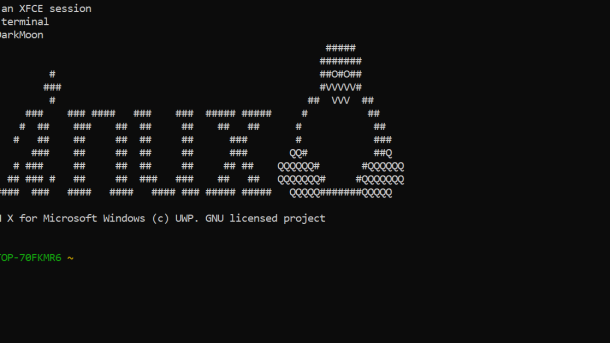

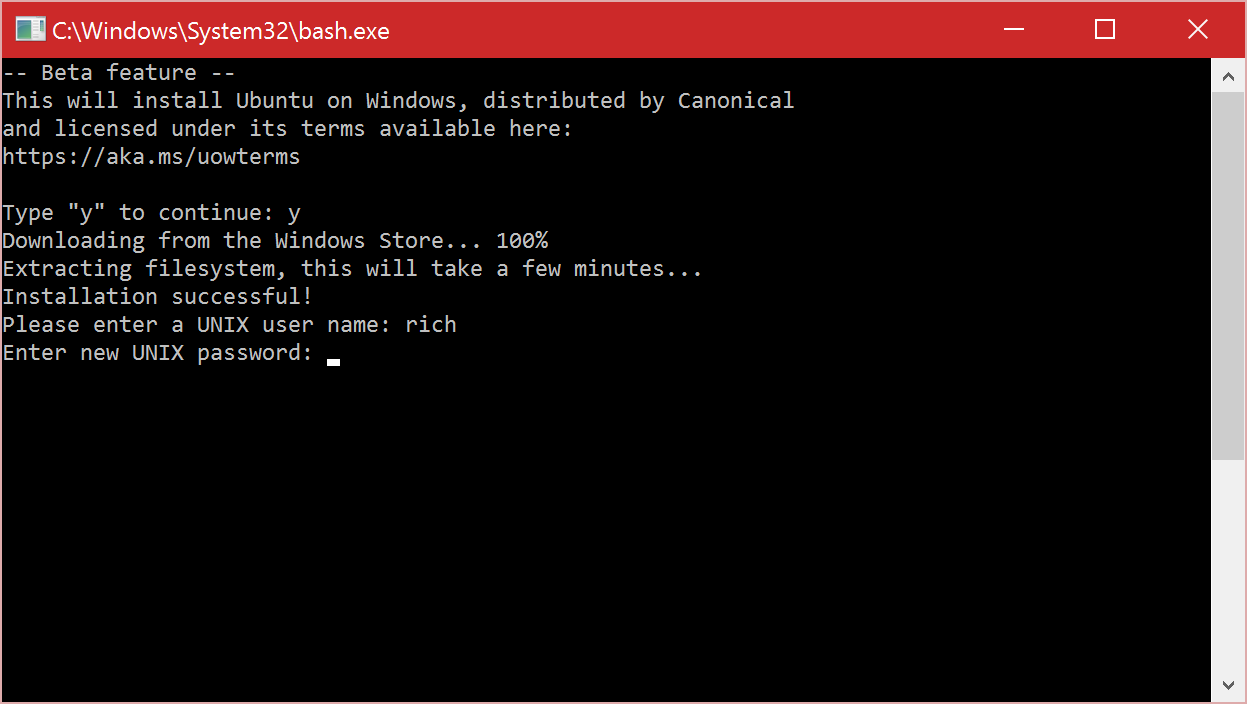

Booting Embedded Linux Systems

Booting an embedded Linux system involves several stages, and each must be configured correctly. The first step is to ensure that the bootloader is properly configured, since it is responsible for initializing the hardware, loading the Linux kernel into memory, and passing control to it.

Next, it is important to set up the kernel parameters correctly to ensure that the system boots smoothly. This includes specifying the root filesystem, the console device, and any other necessary settings for the system to function properly. Additionally, configuring the kernel to support the specific hardware components of the embedded system is crucial for successful booting.
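Kernel parameters are passed as a single space-separated command line, which on a running system can be read from `/proc/cmdline`. The sketch below parses such a line into a dictionary to show how the root filesystem and console settings appear. The example string and device names are assumptions and differ from board to board.

```python
def parse_cmdline(cmdline: str) -> dict:
    """Split a kernel command line into a {name: value} mapping.

    Bare flags such as `rootwait` map to True. Quoted values containing
    spaces are not handled, and a repeated name keeps only its last value.
    """
    params = {}
    for token in cmdline.split():
        name, sep, value = token.partition("=")
        params[name] = value if sep else True
    return params


# Example values only; device names differ from board to board.
example = "console=ttyS0,115200 root=/dev/mmcblk0p2 rootfstype=ext4 rootwait rw"
params = parse_cmdline(example)
print(params["console"], params["root"], params["rootwait"])
```

Here `console=` names the serial port and baud rate used for kernel messages, and `root=` names the device holding the root filesystem.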

Once the bootloader and kernel parameters are set up, the Linux kernel can be loaded and executed on the embedded system. This process involves loading the kernel image into memory and initializing the system, including setting up the memory management unit, initializing the CPU, and configuring the system devices.

After the kernel is successfully loaded, the root filesystem can be mounted, and the system can be brought up to a fully functional state. This may involve starting essential system services, initializing hardware devices, and launching user applications.

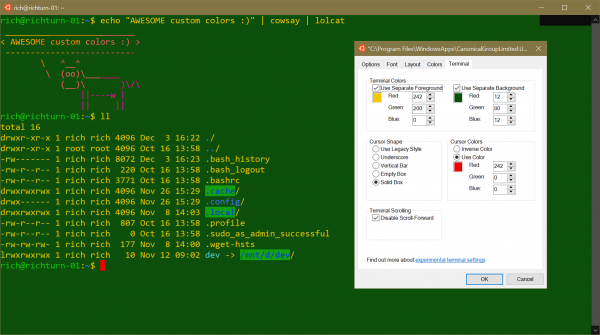

Console UART Configuration

To configure the console UART, you need to access the bootloader or U-Boot environment. Within this environment, you can set the baud rate, data bits, stop bits, and parity for the UART. These configurations ensure that the communication between the embedded device and the computer is reliable and error-free.
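These settings also determine how fast the console can move data. As a small worked example, the sketch below computes payload throughput from the frame format: each byte is sent as one start bit, the data bits, an optional parity bit, and the stop bits. The function name is hypothetical.

```python
def uart_bytes_per_second(
    baud: int, data_bits: int = 8, parity: bool = False, stop_bits: int = 1
) -> float:
    """Payload throughput of an asynchronous serial link in bytes per second."""
    bits_per_frame = 1 + data_bits + (1 if parity else 0) + stop_bits
    return baud / bits_per_frame


rate_8n1 = uart_bytes_per_second(115200)             # 8 data bits, no parity, 1 stop bit
rate_8e1 = uart_bytes_per_second(9600, parity=True)  # parity adds one bit per frame
print(rate_8n1, round(rate_8e1, 1))
```

At 115200 baud with the common 8N1 format, each byte costs 10 bits on the wire, so the link carries 11520 bytes per second at most.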

Additionally, you may need to enable the console UART in the Linux kernel configuration. This involves selecting the appropriate UART driver for your hardware platform and specifying the console device as the UART port you configured earlier in the bootloader.

After configuring the console UART in the bootloader and kernel, you can establish a serial connection between the embedded device and the computer using a USB-to-serial adapter or other serial communication hardware. This connection allows you to access the Linux console on the embedded device and interact with the system for debugging, troubleshooting, and monitoring purposes.

Properly configuring the console UART is essential for developing and testing embedded Linux systems. It provides a vital link between the embedded device and external systems, enabling effective communication and control over the device’s operation.

Gatekeepers in Embedded Systems

One of the key gatekeepers in Embedded Linux Systems is the **kernel**. The kernel is the core component of the operating system that manages the system resources and provides the necessary interfaces for the applications to interact with the hardware. It enforces security policies, controls access to the system resources, and ensures the stability of the system.

Another important gatekeeper in Embedded Linux Systems is the **device driver**. Device drivers are software components that allow the operating system to communicate with the hardware devices. They play a critical role in ensuring that the hardware devices function properly and that the system can access and control them efficiently.

Together, these gatekeepers are essential for ensuring the security, stability, and efficiency of **Linux on embedded systems**.

Signal Integrity Considerations

To maintain signal integrity, it is important to carefully design the layout of the system, paying attention to factors such as trace length, impedance matching, and noise control. Proper grounding and shielding techniques are also essential to minimize signal degradation.
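To show why impedance matching matters, the sketch below evaluates the standard reflection coefficient at a load, (Z_L - Z_0) / (Z_L + Z_0), where Z_0 is the characteristic impedance of the trace. The impedance values are illustrative, not taken from any particular board.

```python
def reflection_coefficient(z_load: float, z_line: float) -> float:
    """Fraction of the incident voltage reflected at the load."""
    return (z_load - z_line) / (z_load + z_line)


matched = reflection_coefficient(50.0, 50.0)     # load equals trace impedance
mismatched = reflection_coefficient(75.0, 50.0)  # load higher than trace impedance
print(matched, mismatched)
```

A matched load reflects nothing, while the 75-ohm load on a 50-ohm trace reflects 20% of the incident voltage back toward the driver.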

Using high-quality components and following best practices in signal integrity design can help prevent signal distortion, reflection, and crosstalk. This is particularly important in systems where multiple components are interconnected, such as Internet of Things (IoT) devices.

By understanding the basics of signal integrity and implementing appropriate measures, developers can ensure that their embedded Linux systems operate efficiently and reliably. It is also advisable to stay updated on advancements in signal integrity technology to continuously improve system performance.

Embedded Linux System Workflow Steps

| Step | Description |
| --- | --- |
| 1 | Choose hardware platform |
| 2 | Select Linux distribution |
| 3 | Customize kernel configuration |
| 4 | Build root filesystem |
| 5 | Compile and install bootloader |
| 6 | Test system functionality |
| 7 | Deploy and monitor system |