Welcome to the world of Docker! In this tutorial, we will explore the basics of Docker on Linux for beginners. Let’s dive in and discover the power of containerization.

Introduction to Docker

Docker is a powerful tool that allows you to easily create, deploy, and run applications in containers. These containers are lightweight, portable, and isolated, making them an ideal solution for software development and deployment.

With Docker, you can package your application along with all its dependencies into a single container, ensuring consistency and reliability across different environments. This eliminates the need to worry about compatibility issues or configuration errors when moving your application from one system to another.

Docker uses OS-level virtualization: containers share the host's kernel instead of each booting a full guest operating system, as virtual machines do under traditional hardware virtualization. As a result, containers start quickly, and you can typically run many of them on a single host with far less overhead than the same number of virtual machines.

By leveraging Docker, you can streamline your development process, automate deployment tasks, and improve overall efficiency in managing your applications. Whether you’re a beginner or an experienced developer, learning how to use Docker on Linux can greatly enhance your workflow and productivity.

Understanding Containers

| Term | Description |
|---|---|
| Docker | A platform for developing, shipping, and running applications in containers. |
| Containerization | The process of packaging an application along with its dependencies into a container. |
| Image | A read-only template that includes the application plus all dependencies and configuration needed to run it. Containers are created from images. |
| Container | A running instance of an image. |
| Container Registry | A repository for storing and managing container images. |

Benefits of Using Docker

– **Efficiency**: Docker relies on **OS-level virtualization**, so a container uses only the resources its application needs rather than those of a full guest operating system. This reduces **overhead** compared with virtual machines.

– **Portability**: Docker containers can run on any system that supports Docker, whether it’s a **laptop**, a **data center**, or even in the **cloud** on services like **Amazon Web Services**. This makes **software portability** a breeze.

– **Automation**: With Docker, you can easily automate the process of building, testing, and deploying your **application software**. This saves time and ensures **best practices** are followed consistently.

– **Isolation**: Each Docker container runs in its own **sandbox**, with a separate filesystem, process space, and network stack. This helps keep applications **secure** and prevents them from interfering with other applications on the same system.

– **Resource Optimization**: Since Docker containers share the same **operating system** kernel, they are more **lightweight** than other forms of **virtualization**, making more efficient use of **computer hardware**.

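– **Collaboration**: Docker lets you share images through registries and keep Dockerfiles alongside your **source code** in shared **repositories**, so everyone on the team can build and run the same environment. This makes **software development** and **engineering** more collaborative and efficient.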

– **Flexibility**: Docker provides a **one-stop shop** for packaging, tagging, and distributing your applications, making it easy to deploy them on any system that runs Docker without worrying about **compatibility issues**.

– **Cost-Effective**: By utilizing Docker, you can save on **infrastructure** costs by running multiple containers on a single **server**, reducing the need for multiple **virtual machines**.

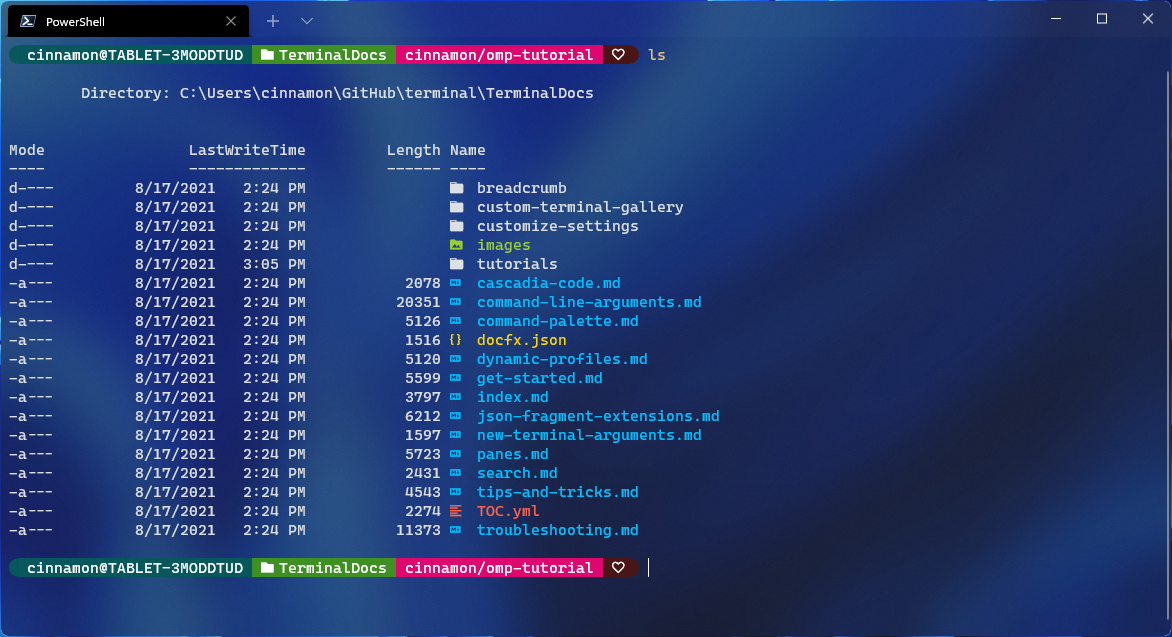

Getting Started with Docker

To **get started with Docker** on Linux, you first need to install Docker on your system. You can follow the official Docker installation guide for Linux to do this. Once Docker is installed, you can start using it to run containers on your machine.

When you run a container using Docker, you are essentially running an isolated instance of an application or service. This allows you to package and run your applications in a consistent and portable way across different environments.

To run a container, use the `docker run` command followed by the name of the image you want to run. If the image is not already available locally, Docker pulls it from a registry (Docker Hub by default), creates a container from it, and starts it on your system.

You can also create your own Docker images by writing a Dockerfile, which is a text file that contains the instructions for building the image. Once you have created your Dockerfile, you can use the `docker build` command to build the image and then run it as a container.

Docker also provides a number of other useful commands for managing containers, such as `docker ps` to list running containers, `docker stop` to stop a container, and `docker rm` to remove a container.
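
The commands above can be strung together into one short session. The sketch below wraps them in a shell function so that nothing runs until you call it. The function name `demo_lifecycle`, the container name `web-demo`, host port 8080, and the choice of the public `nginx:alpine` image are assumptions made for illustration only.

```shell
# Hypothetical helper: start, list, stop, and remove one container.
demo_lifecycle() {
  local name="${1:-web-demo}"   # container name (assumed for this example)

  # Start nginx in the background and publish container port 80 on host port 8080.
  docker run -d --name "$name" -p 8080:80 nginx:alpine

  # List running containers; the new one should appear in the output.
  docker ps

  # Stop the container, then remove it.
  docker stop "$name"
  docker rm "$name"
}
```

Call `demo_lifecycle` from a shell whose user is allowed to talk to the Docker daemon. On many systems that means prefixing the `docker` commands with `sudo` or adding your user to the `docker` group.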

By learning how to use Docker on Linux, you can take advantage of the benefits of containerization, such as improved efficiency, scalability, and portability for your applications.

Docker Installation and Setup

To install and set up Docker on your Linux system, follow these steps. First, install Docker either by following the instructions on the official Docker website or by using the package manager of your Linux distribution. Once Docker is installed, start the Docker service with `sudo systemctl start docker` and enable it to start automatically on boot with `sudo systemctl enable docker`.

After setting up Docker, verify the installation by running `docker --version` to check which version of Docker is installed on your system. You can also run `docker run hello-world` to test whether Docker is working correctly. These steps will help you get started with Docker on your Linux system and begin exploring the world of containerization.
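
As a rough sketch, the post-install steps can be collected into one function. It assumes a distribution that uses systemd (so `systemctl` is available) and that Docker is already installed. The function name `verify_docker` is made up for this example.

```shell
# Hypothetical helper: start Docker, enable it at boot, and run a smoke test.
# Assumes systemd and an existing Docker installation.
verify_docker() {
  sudo systemctl start docker    # start the Docker service now
  sudo systemctl enable docker   # start it automatically on every boot
  docker --version               # print the installed Docker version
  docker run hello-world         # pull and run Docker's small test image
}
```

If the last step fails with a permission error, your user probably cannot access the Docker daemon yet. Running that command with `sudo` is the quickest way to check.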

Docker Commands and Terminology

When working with Docker on Linux, it’s important to familiarize yourself with some key commands and terminology.

Containers are the running instances of Docker images that encapsulate an application and its dependencies.

To start a container, use the `docker run` command followed by the image name.

To view a list of running containers, use the `docker ps` command.

To stop a container, use the `docker stop` command followed by the container ID or name.

Remember that Docker images are read-only templates that contain the application and its dependencies.

To build a Docker image, use the `docker build` command followed by the path to the build context, which is usually the directory that contains the Dockerfile.

To push an image to a Docker registry, use the `docker push` command followed by the image name and tag.
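
Pushing usually goes hand in hand with tagging, because the image name has to include the target repository. Here is a minimal sketch. The function name `push_image` and the repository `example-user/demo-app` used in the usage line are placeholders, not real accounts.

```shell
# Hypothetical helper: tag a local image for a registry and push it.
# Usage: push_image <local-image> <repository> <tag>
push_image() {
  local image="$1" repo="$2" tag="$3"

  # Give the local image a name that includes the target repository and tag.
  docker tag "$image" "$repo:$tag"

  # Upload it; run `docker login` first if the registry requires authentication.
  docker push "$repo:$tag"
}
```

For example, `push_image demo-app example-user/demo-app 1.0` would tag a local `demo-app` image as `example-user/demo-app:1.0` and push it.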

These are just a few essential commands and terms to get you started with Docker on Linux.

Building Docker Images

When building a Docker image, each instruction in the Dockerfile represents a step in the process. You use instructions such as **FROM** (which selects the base image), RUN, and COPY to build your image layer by layer.

It’s important to optimize your Dockerfile for efficiency and **performance**. This includes minimizing the number of layers, using **alpine** images when possible, and cleaning up unnecessary files.

Once you have your Dockerfile ready, you can build your image using the **docker build** command. This command will read your Dockerfile and create a new image based on the instructions provided.

Remember to tag your image with a **version number** or other identifier to keep track of different versions. This will make it easier to manage and update your images in the future.
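
To make this concrete, the sketch below writes a tiny build context to disk and defines a function that builds and tags the image. The directory name `demo-app`, the script `hello.sh`, the image name, and the `alpine:3.20` base tag are all assumptions chosen for the example.

```shell
# Create a small build context in ./demo-app (directory name is an assumption).
mkdir -p demo-app
printf '#!/bin/sh\necho "Hello from a container"\n' > demo-app/hello.sh

# Write a minimal Dockerfile; each instruction is one build step.
cat > demo-app/Dockerfile <<'EOF'
# Start from a small base image.
FROM alpine:3.20
# Copy the script from the build context into the image.
COPY hello.sh /usr/local/bin/hello.sh
# Run a command at build time to make the script executable.
RUN chmod +x /usr/local/bin/hello.sh
# Default command when a container starts from this image.
CMD ["/usr/local/bin/hello.sh"]
EOF

# Hypothetical helper: build the image and tag it with a version (default 1.0).
build_demo() {
  docker build -t "demo-app:${1:-1.0}" demo-app
}
```

Running `build_demo 1.1` builds the context in `demo-app` and tags the result `demo-app:1.1`. You can then start it with `docker run --rm demo-app:1.1`.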

Building Docker images is a fundamental skill for anyone working with Docker containers.

Docker Networking and Storage

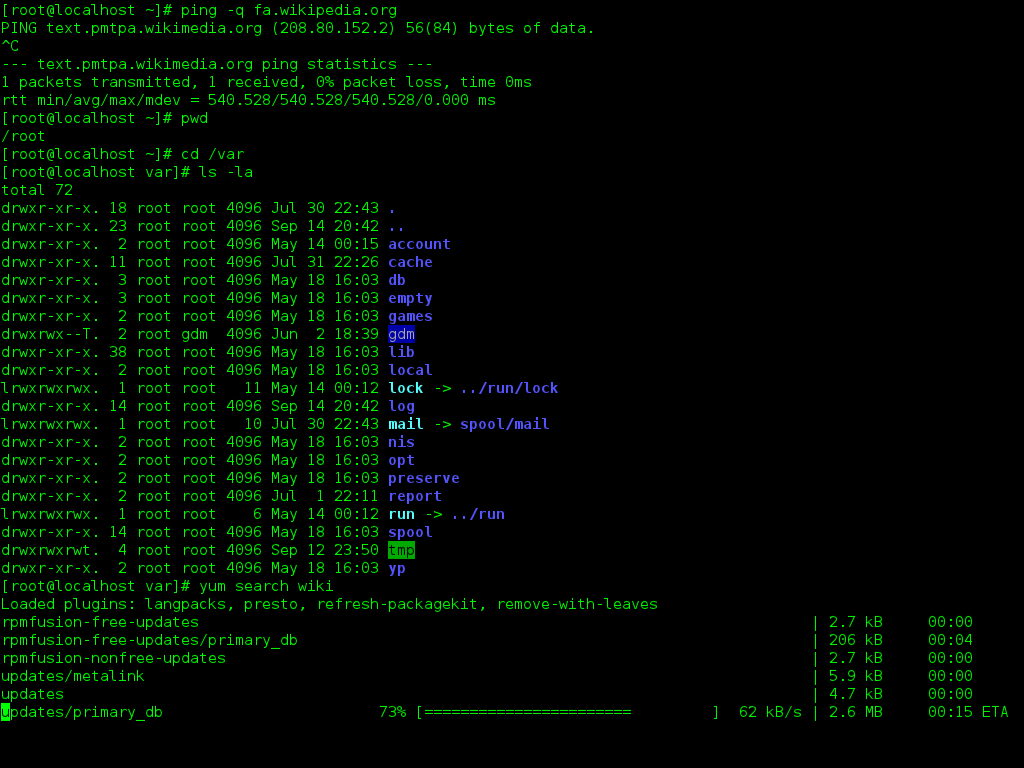

Docker Networking allows containers to communicate with each other and the outside world. By default, Docker attaches new containers to a built-in bridge network. You can also create custom (user-defined) networks for more control over communication; containers on the same user-defined network can reach each other by container name.
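
A custom network can be tried out with two short-lived containers. This is only a sketch: the function name `demo_network`, the network name `demo-net`, the container name `web`, and the images used are illustrative assumptions.

```shell
# Hypothetical helper: connect two containers over a user-defined bridge network.
demo_network() {
  local net="${1:-demo-net}"

  # Create a user-defined bridge network.
  docker network create "$net"

  # Start a web server attached to that network.
  docker run -d --name web --network "$net" nginx:alpine

  # From a second, throwaway container, fetch a page from the first one by name.
  docker run --rm --network "$net" alpine:3.20 wget -qO- http://web

  # Clean up the container and the network.
  docker rm -f web
  docker network rm "$net"
}
```

The second `docker run` reaches the first container through the hostname `web`, which relies on the name resolution Docker provides on user-defined networks.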

Docker Storage enables containers to store data persistently. Docker volumes are the preferred method for data storage, allowing data to be shared between containers. You can also use bind mounts to link container directories to host directories for easy access.
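
The difference between the two storage options is easiest to see side by side. The following sketch uses a named volume called `demo-data` and bind-mounts the current directory. Both names, the function name `demo_storage`, and the `alpine:3.20` image are assumptions for illustration.

```shell
# Hypothetical helper: compare a named volume with a bind mount.
demo_storage() {
  # Create a named volume that Docker manages for you.
  docker volume create demo-data

  # Write a file into the volume from one container...
  docker run --rm -v demo-data:/data alpine:3.20 sh -c 'echo saved > /data/note.txt'

  # ...and read it back from a second container: the data outlived the first one.
  docker run --rm -v demo-data:/data alpine:3.20 cat /data/note.txt

  # Bind mount: make the current host directory visible, read-only, at /host.
  docker run --rm -v "$PWD":/host:ro alpine:3.20 ls /host

  # Remove the volume when you are finished with it.
  docker volume rm demo-data
}
```

The `:ro` suffix on the bind mount keeps the container from modifying host files, which is a sensible default while experimenting.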

Understanding Docker networking and storage is crucial for managing containers effectively. By mastering these concepts, you can optimize communication between containers and ensure data persistence. Take the time to learn and practice these skills to enhance your Docker experience.

Docker Compose for Multi-container Environments

Docker Compose is a powerful tool for managing multi-container environments in Docker. It allows you to define and run multi-container Docker applications with just a single file. This makes it easier to deploy and scale your applications.

With Docker Compose, you can create a YAML file that defines all the services, networks, and volumes for your application. This file can then be used to start and stop your entire application with a single command.
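
Such a file might look like the one written by the sketch below, which defines two services, one network, and one volume. Everything named here (the `demo-stack` directory, the `web` and `cache` services, the `backend` network, the `cache-data` volume, and the helper functions) is an assumption for the example, and the commands assume the Compose plugin that provides `docker compose`.

```shell
# Write an example Compose file into ./demo-stack (directory name is an assumption).
mkdir -p demo-stack
cat > demo-stack/compose.yaml <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"        # host port 8080 -> container port 80
    networks:
      - backend
  cache:
    image: redis:alpine
    volumes:
      - cache-data:/data # keep Redis data in a named volume
    networks:
      - backend

networks:
  backend:

volumes:
  cache-data:
EOF

# Hypothetical helpers: start and stop the whole application with one command each.
stack_up()   { docker compose -f demo-stack/compose.yaml up -d; }
stack_down() { docker compose -f demo-stack/compose.yaml down; }
```

`stack_up` starts both services in the background, and `stack_down` stops and removes them. The named volume is kept unless you add `--volumes` to the `down` command.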

By using Docker Compose, you can simplify the process of managing complex multi-container environments. This can be especially helpful when working with applications that have multiple components that need to communicate with each other.

Managing Docker Containers

To effectively manage Docker containers in Linux, it is important to understand key commands and concepts. One essential command is `docker ps`, which lists all running containers. Use `docker stop` followed by the container ID to stop a running container.

For removing containers, use `docker rm` followed by the container ID. To view all containers, including stopped ones, use `docker ps -a`. To start a stopped container, use `docker start` followed by the container ID.

Managing Docker containers also involves monitoring resource usage. Utilize `docker stats` to view CPU, memory, and network usage of containers. For troubleshooting, inspect container logs using `docker logs` followed by the container ID.
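
A quick health check on a single container could combine these commands as follows. The function name `check_container` is invented for this sketch, and the 20-line log limit is an arbitrary choice.

```shell
# Hypothetical helper: restart a stopped container and look at its state.
# Usage: check_container <container-id-or-name>
check_container() {
  local target="$1"

  docker ps -a                        # all containers, including stopped ones
  docker start "$target"              # start the container if it is stopped
  docker stats --no-stream "$target"  # one snapshot of CPU, memory, and network usage
  docker logs --tail 20 "$target"     # the last 20 log lines
}
```

Without `--no-stream`, `docker stats` keeps refreshing until you press Ctrl+C, which is useful interactively but not inside a script.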

By mastering these basic commands, beginners can efficiently manage Docker containers in a Linux environment. Practice using these commands to gain confidence and improve your Docker skills.

Docker Swarm for Orchestration

Docker Swarm is a powerful tool for orchestrating containers in a clustered environment. With Docker Swarm, you can easily manage multiple Docker hosts as a single virtual system.

Orchestration is the process of automating the deployment, scaling, and management of containerized applications. Docker Swarm simplifies this process by providing a centralized way to manage your containers.

To get started with Docker Swarm, you first need to set up a Docker environment on your Linux machine. Once you have Docker installed, you can initialize a Swarm with a single command, `docker swarm init`.

Docker Swarm uses a concept called services to define the tasks that should be executed across the Swarm. You can define services in a Docker Compose file and deploy them to the Swarm as a stack with the `docker stack deploy` command.
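
On a single machine, the whole flow might be sketched like this. The function name `demo_swarm` is hypothetical, and the code assumes you pass it a Compose file that defines a service named `web`. Swarm prefixes service names with the stack name, which is why the scale command targets `<stack>_web`.

```shell
# Hypothetical helper: create a one-node Swarm and deploy a stack to it.
# Usage: demo_swarm <compose-file> <stack-name>
demo_swarm() {
  local file="$1" stack="$2"

  # Turn this Docker host into a Swarm manager.
  # On hosts with several network interfaces you may need --advertise-addr.
  docker swarm init

  # Deploy the services defined in the Compose file as a stack.
  docker stack deploy -c "$file" "$stack"

  # List the services and scale the assumed "web" service to three replicas.
  docker service ls
  docker service scale "${stack}_web=3"
}
```

To undo the experiment, `docker stack rm <stack-name>` removes the services and `docker swarm leave --force` returns the host to standalone mode.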

By using Docker Swarm for orchestration, you can easily scale your applications up or down based on demand. This flexibility is essential for cloud computing environments where resources need to be dynamically allocated.

Advantages and Disadvantages of Docker

Advantages of Docker include portability and efficiency. Docker allows developers to package applications and dependencies in containers, making it easy to move them between environments. This can reduce compatibility issues and streamline deployment processes.

Additionally, Docker enables isolation of applications, ensuring that they do not interfere with each other. This can improve security and stability, especially in environments where multiple applications are running simultaneously.

On the other hand, there are some disadvantages to using Docker. Containers are lighter than virtual machines, but they are not free of overhead: the extra networking and storage layers can cost some performance, and each container consumes some additional resources compared to running the application directly on the host operating system.

Furthermore, managing a large number of containers can be complex and time-consuming. This can lead to challenges in monitoring, scaling, and troubleshooting Dockerized applications.

Docker in Production Environments

One key aspect to consider is the **abstraction** of computing resources: because containers decouple applications from the machines they run on, you can scale by adding hosts in your own **data center** or in the cloud. Services such as **Amazon Elastic Compute Cloud** on **Amazon Web Services** can provide the hosts on which you deploy and manage your Docker containers.

Automation plays a crucial role in maintaining a reliable production environment. Keeping your Dockerfiles and Compose files under version control with **Git**, for example in a **GitHub** repository, lets your team rebuild the same images on demand, which supports **software portability** and keeps your **application software** up to date.

Next Steps and Conclusion

Next Steps:

To further your understanding of Docker and Linux, consider exploring more advanced topics such as container orchestration with Kubernetes or integrating Docker into your CI/CD pipeline. Look for online courses or tutorials that delve deeper into these areas.

Consider joining online communities or forums related to Docker and Linux where you can ask questions, share knowledge, and stay updated on the latest trends and best practices in the industry.

Conclusion:

Congratulations on completing this Docker Linux tutorial for beginners! By mastering these foundational concepts, you are well on your way to becoming proficient in containerization and Linux. Keep practicing and experimenting with Docker to solidify your knowledge and skills. Happy coding!