Cloud-native applications are built to exploit the scalability and flexibility of cloud platforms. This article explains what they are, how they are built, and how they relate to serverless computing, state management, and conventional cloud-based apps.

Overview of cloud-native applications

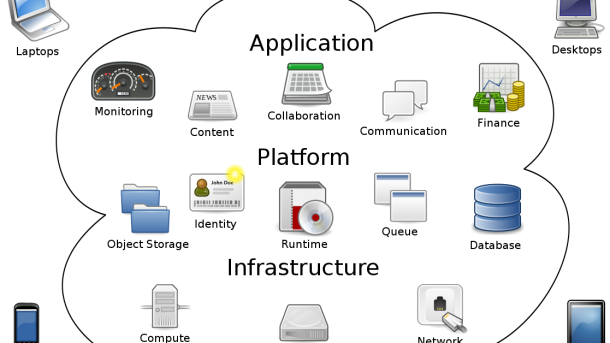

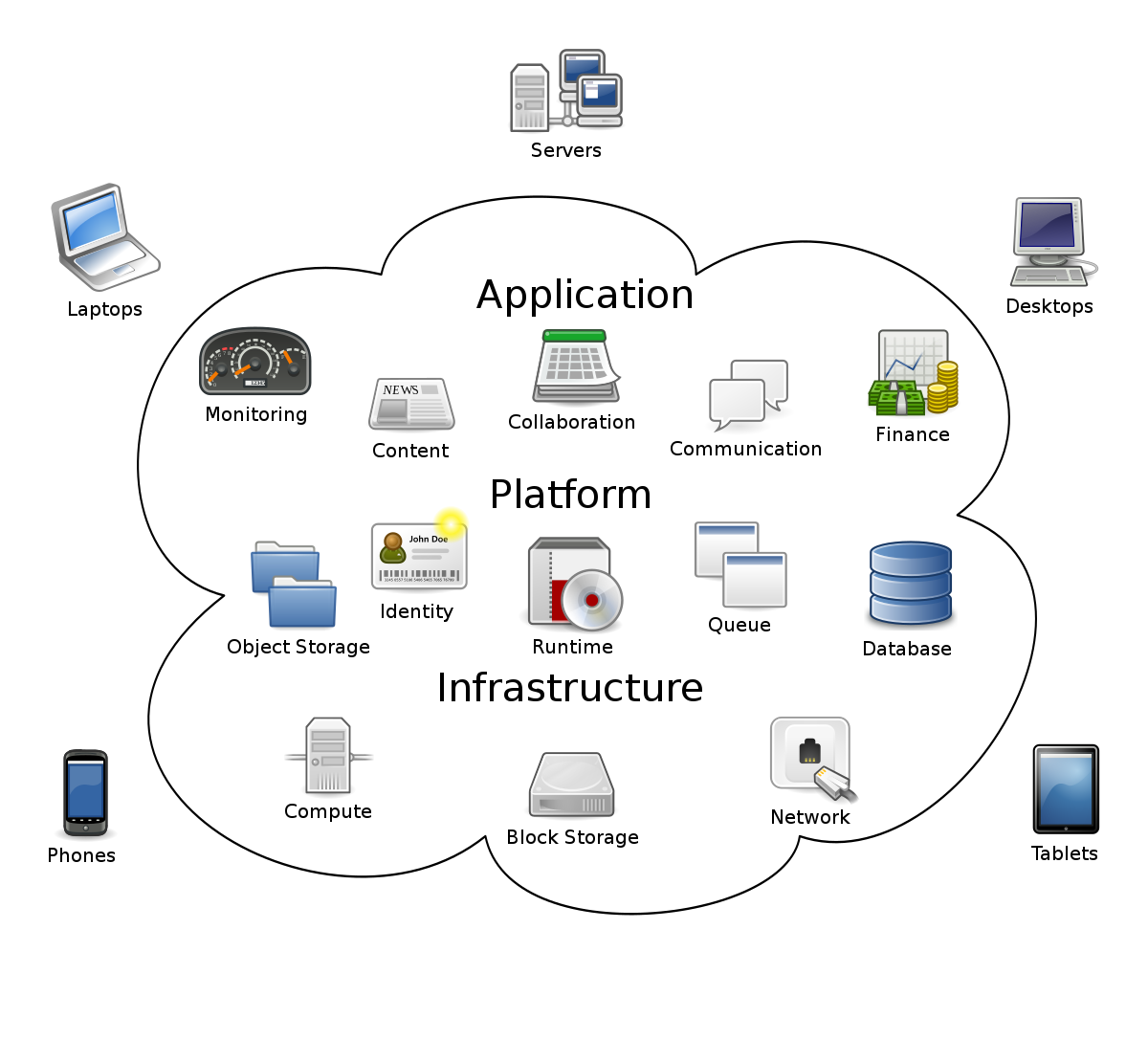

Cloud-native applications are a key component of modern computing. They are designed to take full advantage of cloud computing architecture and enable businesses to achieve greater scalability, flexibility, and efficiency.

At their core, cloud-native applications are built to run on and leverage the capabilities of cloud platforms. This makes them inherently scalable, allowing businesses to accommodate changes in demand without significant infrastructure investments.

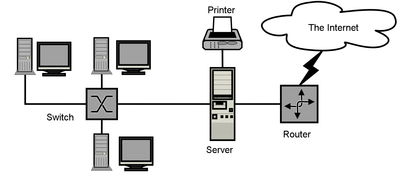

One of the key characteristics of cloud-native applications is their ability to be distributed and run across multiple machines in a computer cluster or network. This allows for improved fault tolerance and reliability, as well as better load balancing and resource management.

Cloud-native applications are also designed to be modular and loosely coupled, which means that individual components can be developed, deployed, and scaled independently. This enables faster innovation and continuous delivery, as well as better management of the application’s lifecycle.

To build and deploy cloud-native applications, businesses often adopt DevOps practices and leverage automation tools. This helps streamline the development and deployment process, reduce errors, and improve overall efficiency.

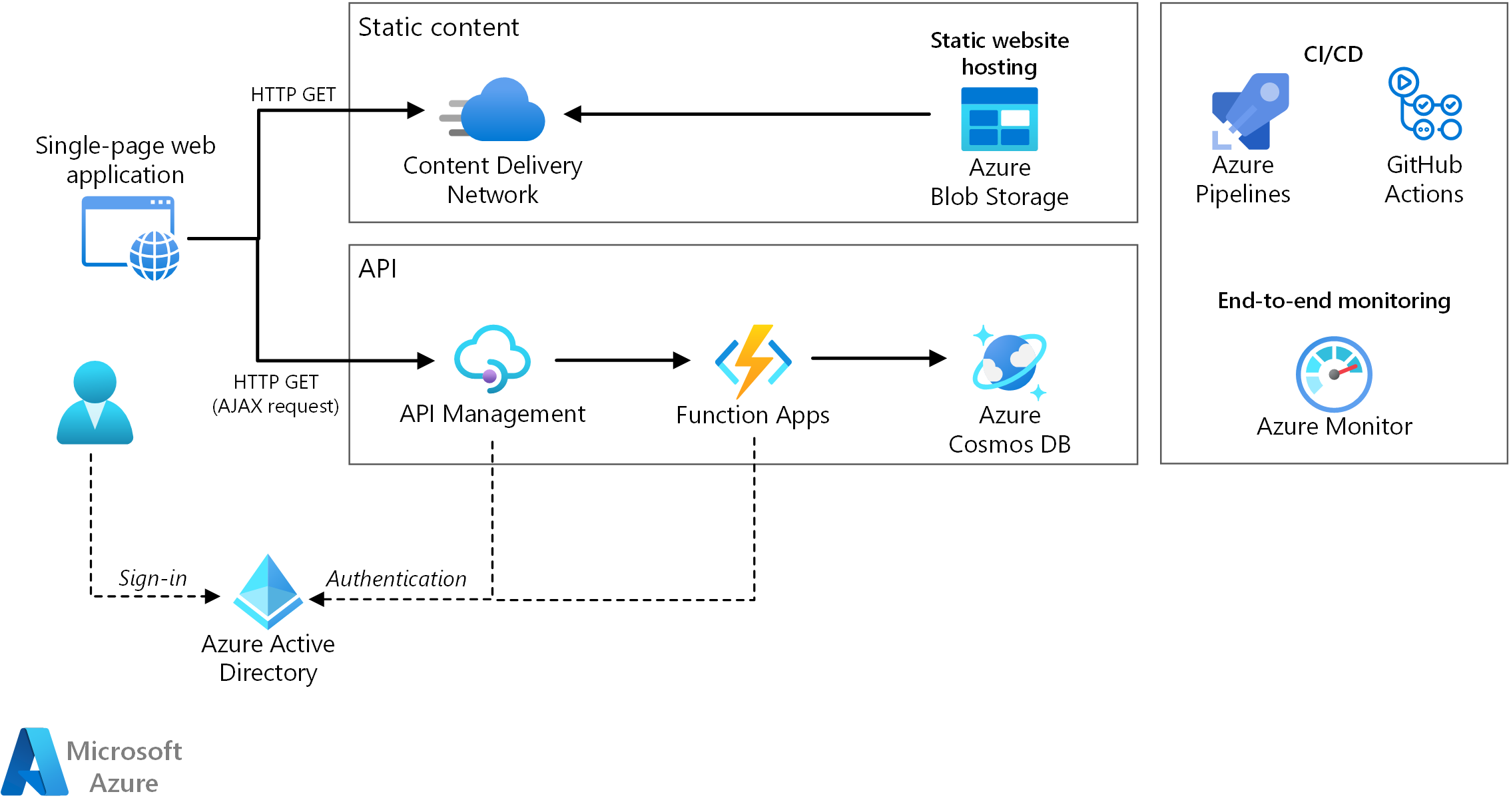

Cloud-native applications also make use of APIs and communication protocols to interact with other applications and services, both within and outside of the cloud environment. This enables seamless integration with existing systems and the ability to easily consume and provide services.

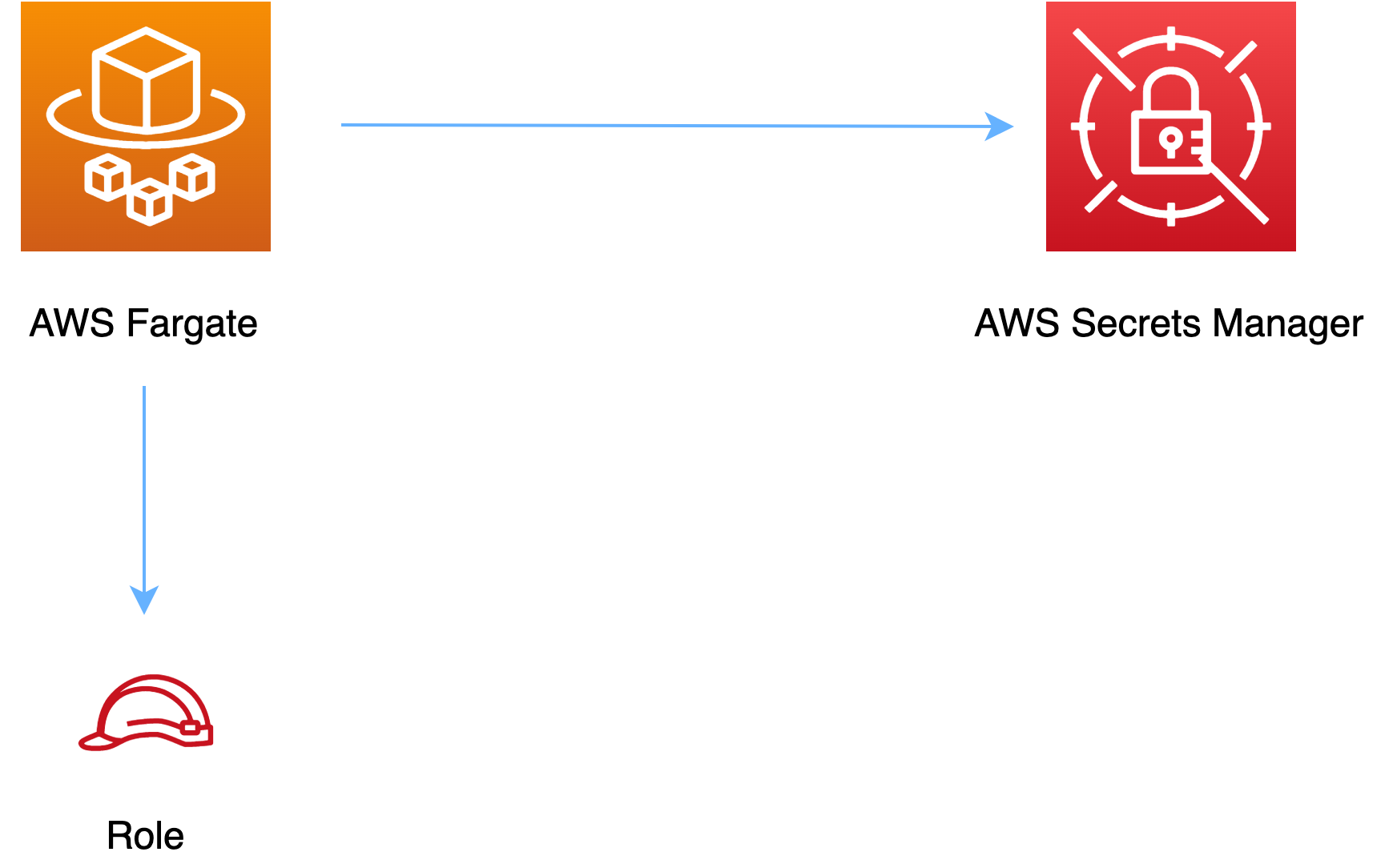

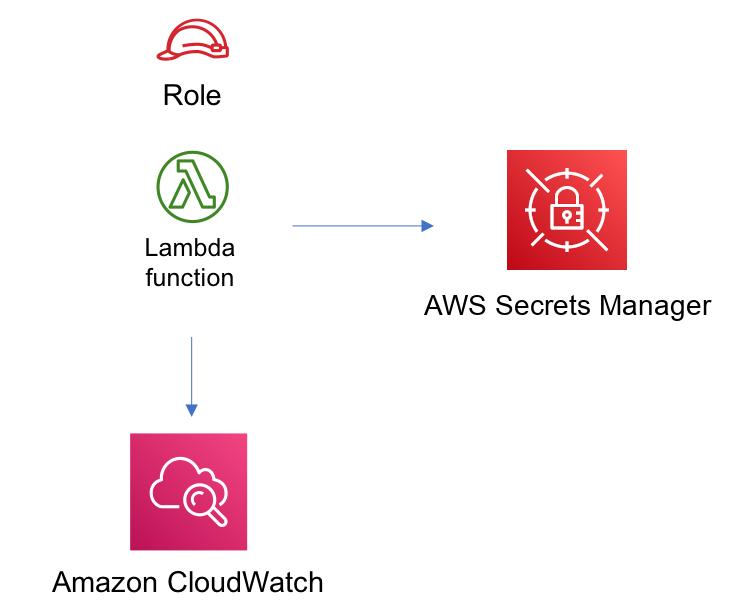

In terms of security, cloud-native applications prioritize the protection of data and resources. They make use of various security measures, such as authentication, encryption, and access controls, to ensure that sensitive information remains secure.

Building cloud-native applications

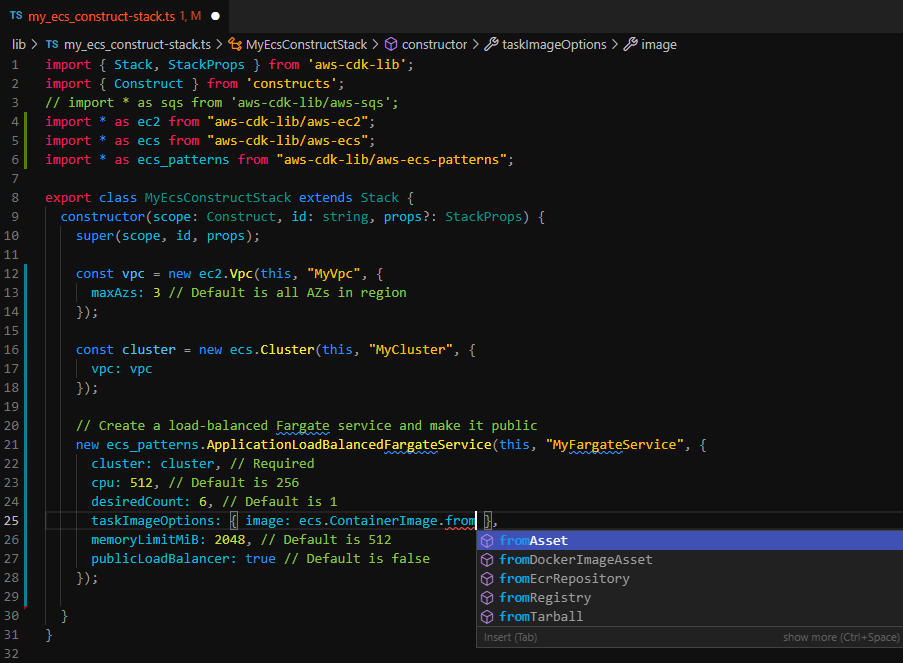

One key aspect of building cloud-native applications is the use of **containerization**. Containers provide a lightweight and portable way to package and distribute applications, making it easier to deploy and manage them across different environments. Containerization is a form of **OS-level virtualization**: applications share the host's kernel but run in isolated environments without interfering with each other.

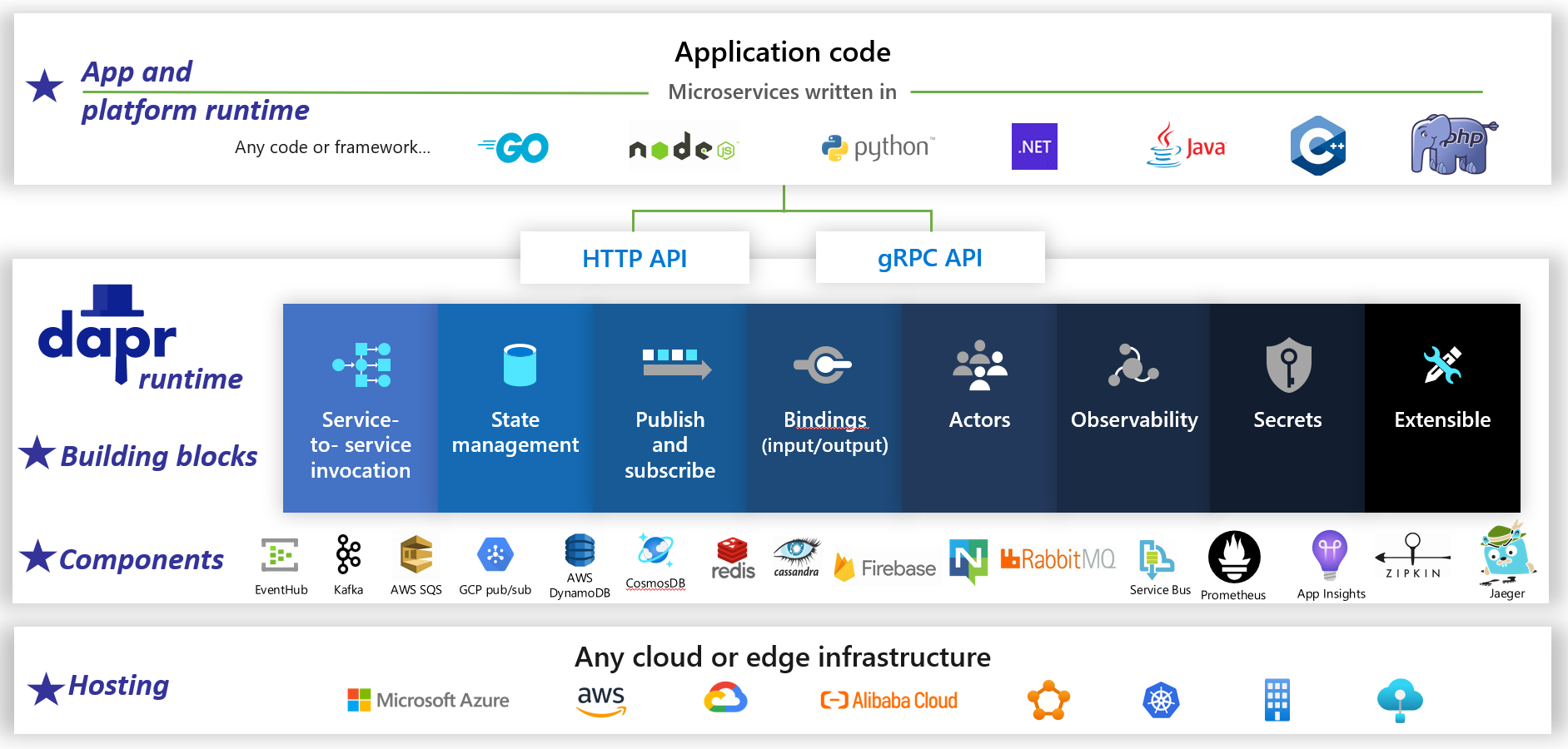

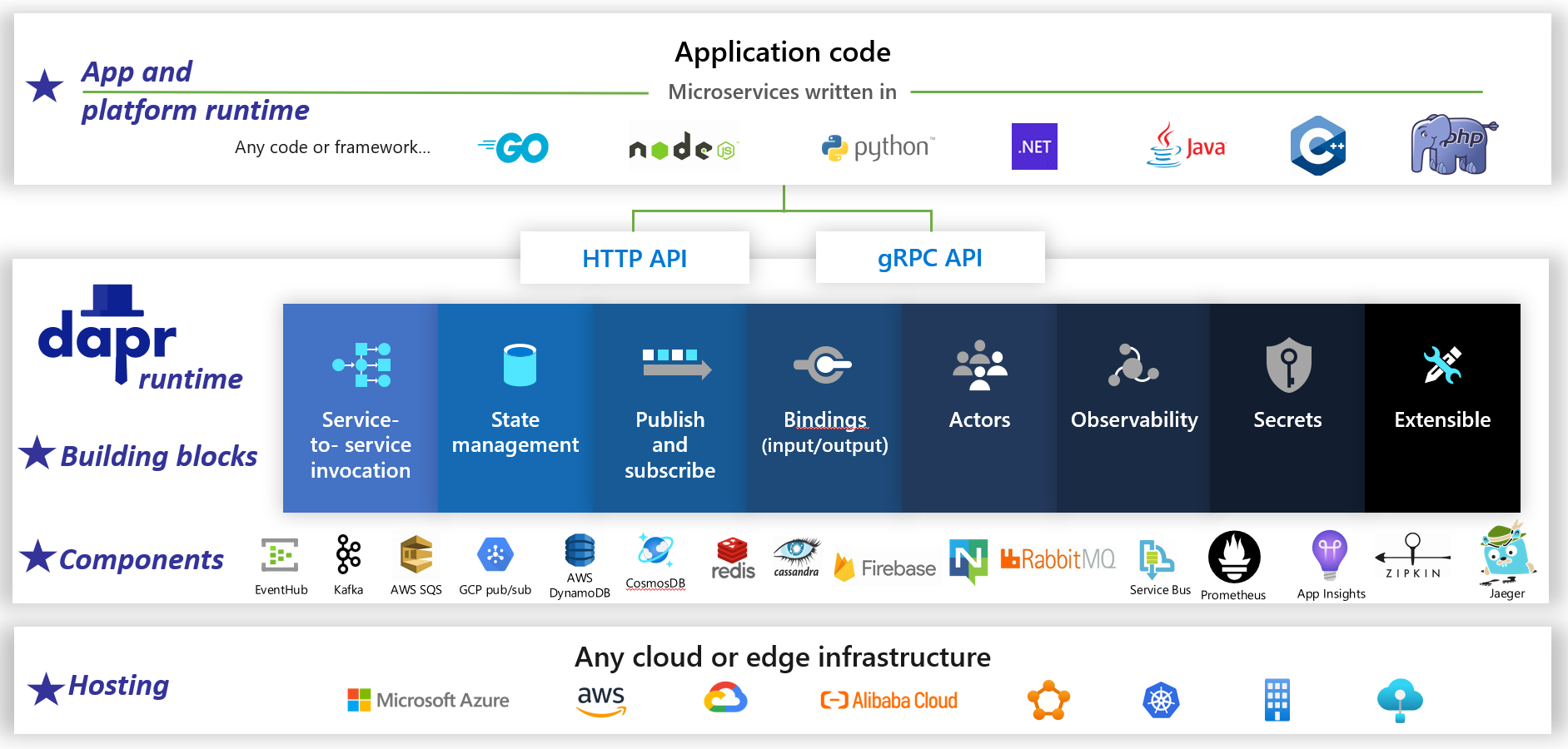

Another important concept in cloud-native development is **microservices**. Instead of building monolithic applications, cloud-native developers break down their applications into smaller, modular services that can be developed, deployed, and scaled independently. This approach promotes **loose coupling** and **modularity**, making it easier to update and maintain the different components of the application.

To ensure that cloud-native applications can handle high traffic and provide a seamless user experience, **scalability** and **fault tolerance** are crucial. Cloud-native applications are designed to automatically scale up or down based on demand, and they are built with **load balancing** and **redundancy** in mind to ensure high availability and minimize downtime.
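As a rough illustration of scaling on demand, the sketch below computes how many instances to run from the current load. It uses a simple proportional rule, similar in spirit to the one documented for the Kubernetes Horizontal Pod Autoscaler. The function name, parameters, and default bounds are assumptions chosen for this example, not part of any platform's API.

```python
import math


def desired_replicas(current_replicas: int, current_load: float, target_load: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Return how many instances to run so per-instance load approaches target_load."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    # Scale the replica count in proportion to how far the load is from the target.
    wanted = math.ceil(current_replicas * current_load / target_load)
    # Clamp to the configured bounds (hypothetical defaults) so the application
    # never scales to zero or grows without limit.
    return max(min_replicas, min(max_replicas, wanted))
```

With four instances each at 90% load and a 60% target, the rule asks for six instances. At 30% load it asks for two.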

**Automation** is another fundamental principle of cloud-native development. By automating processes such as deployment, testing, and monitoring, developers can achieve **continuous delivery** and improve the overall speed and efficiency of their application development lifecycle. This is where **DevOps** practices come into play, combining development and operations to streamline the software delivery process.
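To make the idea of an automated delivery pipeline concrete, here is a minimal sketch of a runner that executes stages in order and stops at the first failure. The stage names and the `run_pipeline` helper are hypothetical. Real pipelines are normally defined in a CI/CD tool's own configuration format.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a zero-argument step that reports success or failure.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stop at the first failure, and return a log of outcomes."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later stages (for example, deploy) never run after a failure
    return log
```

The key design choice is that a failed test stage prevents deployment, which is what lets teams release frequently without shipping broken builds.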

In addition to these technical considerations, building cloud-native applications also requires a shift in mindset and organizational culture. It involves embracing **self-service** and empowering development teams to take ownership of their applications and infrastructure. This promotes **business agility** and allows organizations to quickly respond to changing market needs and customer demands.

Serverless architecture explained

Serverless architecture is a buzzword in the world of cloud-native computing, and it’s important to understand what it means and how it can benefit your organization.

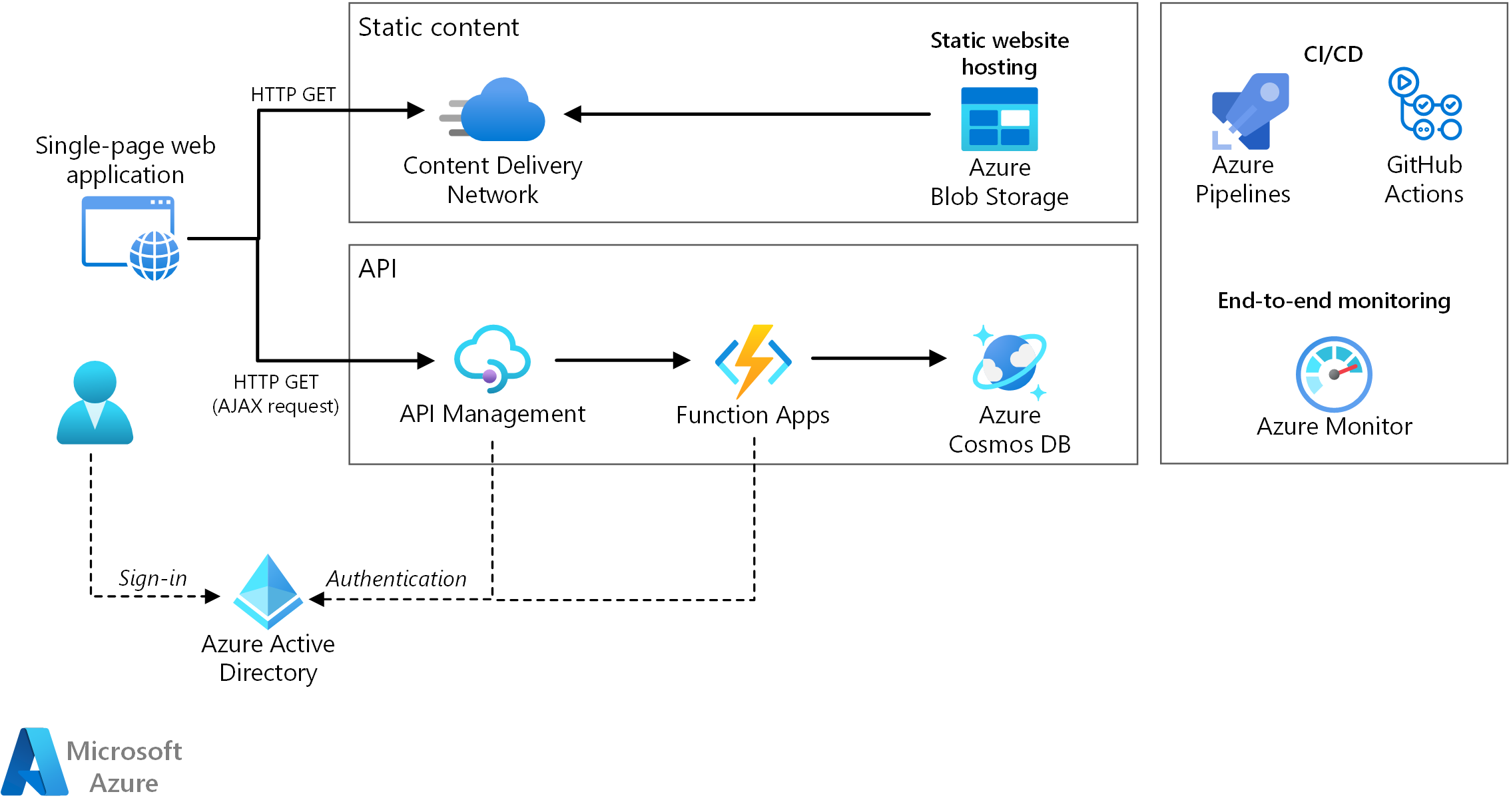

At its core, serverless architecture eliminates the need for you to provision and manage servers. Instead, you can focus on writing and deploying code that runs in response to events or triggers. This means that you can build and scale applications without worrying about the underlying infrastructure.

One of the key benefits of serverless architecture is its ability to provide a highly scalable and elastic environment. With serverless, you can automatically scale your application based on demand, ensuring that you have enough resources to handle peak loads without overprovisioning and wasting resources during quieter periods.

Another advantage of serverless architecture is its ability to improve business agility. By abstracting away the underlying infrastructure, serverless allows developers to focus solely on writing code and delivering value to the business. This can speed up the development process and enable organizations to respond quickly to changing market conditions.

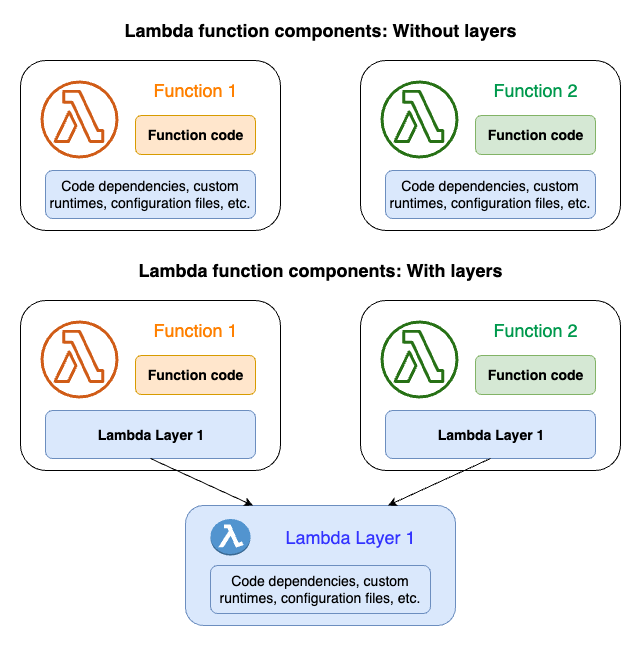

In a serverless architecture, individual functions or services are deployed and run in response to specific events or triggers. These functions can be written in the programming language of your choice and can be easily integrated with other services and APIs. This loose coupling and modularity make it easier to develop, test, and deploy new features and updates to your application.
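A function in this model can be very small. The sketch below follows the `handler(event, context)` convention that AWS Lambda uses for Python functions. The shape of the `event` dictionary and the response fields are assumptions for illustration, since the real structure depends on the trigger.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per event."""
    # "name" is a hypothetical field; real events are shaped by their trigger.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the function is an ordinary Python callable, it can be tested locally by passing a sample event, for example `handler({"name": "cloud"}, None)`.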

Serverless architecture also offers inherent benefits in terms of cost savings. With serverless, you only pay for the actual compute time and resources that your code consumes, rather than paying for idle servers or overprovisioning. This can lead to significant cost savings, especially for applications with unpredictable or variable workloads.
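The pay-per-use model can be approximated with simple arithmetic. The sketch below assumes a bill made up of a compute charge per GB-second and a charge per million requests, which is a common structure. All prices are inputs, and the function name and parameters are hypothetical; check your provider's current price list before relying on any figure.

```python
def monthly_cost(invocations: int, avg_duration_s: float, memory_gb: float,
                 price_per_gb_second: float, price_per_million_requests: float) -> float:
    """Estimate a monthly bill under a pay-per-use model (all prices are inputs)."""
    # Compute charge: total seconds of execution, weighted by allocated memory.
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    # Request charge: a flat fee per million invocations.
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests
```

Note that the estimate is zero when there are no invocations, which is the main contrast with paying for idle servers.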

In terms of implementation, serverless architecture relies on platforms offered by cloud providers, such as AWS Lambda from Amazon Web Services or Azure Functions from Microsoft, to manage the underlying infrastructure and handle the scaling and execution of your code. These platforms handle tasks such as load balancing, resource management, and orchestration, allowing you to focus on writing code and delivering value to your users.

Cloud-native apps with Red Hat

With cloud-native apps, developers can take advantage of the scalability and flexibility of a computer cluster or network. These apps are designed to be modular, making it easier to update and maintain them. They also utilize APIs for seamless integration with other applications and services.

One of the key benefits of cloud-native apps is load balancing. This ensures that resources are distributed evenly across the cluster, improving performance and preventing any single node from becoming overwhelmed. Provisioning is also simplified, allowing developers to quickly and easily allocate resources as needed.

Cloud-native apps are designed to take advantage of cloud computing architecture, utilizing the internet and self-service interfaces for easy access and management. They are built using application software and programming languages that are compatible with the cloud environment.

Red Hat’s cloud-native apps also leverage OS-level virtualization, allowing for efficient resource allocation and utilization. This ensures that applications run smoothly and are not affected by the underlying hardware.

Throughout the product lifecycle, Red Hat provides support and updates for its cloud-native apps. This ensures that organizations can continually improve their applications and stay up to date with the latest technology.

By using Red Hat for cloud-native app development, organizations can benefit from robust server capabilities, mobile app development tools, and a wide range of software frameworks. This allows for efficient data storage and seamless integration with other systems.

Cloud-native apps with Red Hat also offer advanced networking capabilities, including IP address management and orchestration. This allows for efficient resource allocation and scheduling, reducing the risk of downtime and improving overall performance.

Ultimately, the goal of cloud-native apps with Red Hat is to provide organizations with a scalable and efficient solution for their application development needs. By embracing this technology, organizations can experience the benefits of improved performance, enhanced feedback and the ability to continually improve their applications.

Stateful vs stateless applications

Stateful and stateless applications are two different approaches to designing and building cloud-native applications. Understanding the differences between the two can help guide your decision-making process when developing applications for the cloud.

A stateful application is one that relies on storing and managing data or state information. This data can include user preferences, session information, or any other type of data that needs to be persisted and accessed across multiple requests. Stateful applications typically require a dedicated server or database to store and manage this data.

On the other hand, a stateless application does not keep state from one request to the next on the server that handles it. Instead, each request contains all the information needed to process it, or refers to data held in an external store. Because any instance can serve any request, stateless applications are easier to scale horizontally by adding more servers to handle increased demand.

When deciding between stateful and stateless applications, there are several factors to consider. Stateful applications can provide more flexibility and complex functionality since they can store and access data across multiple requests. However, they can also be more difficult to scale and require more resources to handle increased traffic.

Stateless applications, on the other hand, are easier to scale and often require fewer resources per instance, because no instance holds data that another instance would need. However, they need additional mechanisms, such as session tokens, cookies, or an external data store, to maintain user sessions across requests.
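One way a stateless service can maintain a session is to hand the client a signed token that carries the session data, so that any server instance can verify it without a lookup. The sketch below uses an HMAC signature from Python's standard library. The token layout, the `SECRET` value, and the function names are assumptions for illustration. Production systems typically use an established format such as JWT and add an expiry time.

```python
import base64
import hashlib
import hmac
import json

SECRET = b"demo-secret-change-me"  # hypothetical key; load from a secret store in practice


def issue_token(session: dict) -> str:
    """Serialize session data and append an HMAC so the server need not store it."""
    payload = base64.urlsafe_b64encode(json.dumps(session, sort_keys=True).encode())
    signature = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return f"{payload.decode()}.{signature}"


def read_token(token: str) -> dict:
    """Verify the signature and return the session data, or raise ValueError."""
    payload, _, signature = token.rpartition(".")
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many characters matched via timing.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        raise ValueError("invalid token signature")
    return json.loads(base64.urlsafe_b64decode(payload))
```

The token is signed but not encrypted, so the client can read its contents. It should carry identifiers rather than secrets.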

Understanding serverless technology

Serverless technology is a key component of cloud-native applications. It allows developers to focus on writing code without worrying about managing servers. With serverless technology, developers can simply upload their code and let the cloud provider handle the rest.

One of the main benefits of serverless technology is its scalability. It allows applications to automatically scale up or down based on demand, ensuring that resources are efficiently used and costs are minimized. This is particularly useful for applications with unpredictable traffic patterns or those that experience sudden spikes in usage.

Another advantage of serverless technology is its cost-effectiveness. Since developers only pay for the actual usage of their code, there is no need to provision and maintain servers that may remain underutilized. This makes serverless technology an attractive option for startups and small businesses with limited resources.

Serverless technology also promotes faster development cycles. Developers can focus solely on writing code and delivering value to users, without the need to worry about infrastructure management. This enables teams to iterate and release new features more quickly, resulting in faster time-to-market.

In addition, serverless technology offers built-in fault tolerance and high availability. Cloud providers typically run functions across multiple data centers or availability zones, so that applications remain accessible even if one of them fails. This reduces the need for developers to implement complex redundancy mechanisms themselves.

To leverage serverless technology effectively, developers benefit from a solid understanding of Linux. Linux is the operating system of choice for many cloud providers and is often used in the development and deployment of serverless applications. Taking Linux training can provide developers with the necessary skills to navigate and utilize Linux-based environments.

By mastering Linux, developers can confidently work with serverless technology and fully harness its benefits. They will be able to efficiently deploy and manage their applications, optimize resource usage, and troubleshoot any issues that may arise. Linux training can also equip developers with the knowledge to integrate serverless applications with other technologies, such as APIs or cloud storage.

More insights on cloud-native applications

This section looks more closely at what sets cloud-native applications apart: their architecture, their use of containers and APIs, and their reliance on automation.

Firstly, let’s talk about the importance of cloud-native applications in today’s digital landscape. With the increasing reliance on cloud computing and the need for scalable and flexible solutions, cloud-native applications have become a necessity for businesses. These applications are specifically designed to run on cloud infrastructure, taking advantage of its capabilities such as scalability, resilience, and high availability.

One key aspect of cloud-native applications is their architecture. They are built using microservices, which are small, independent components that work together to perform specific tasks. This modular approach allows for easy maintenance, scalability, and continuous delivery.

Another important aspect is the use of containers. Containers provide a lightweight and portable environment for running applications. They encapsulate all the necessary dependencies, making it easier to deploy applications across different environments. Container orchestration tools like Kubernetes help manage and scale containerized applications efficiently.

Cloud-native applications also rely heavily on APIs (Application Programming Interfaces) for communication between different components. APIs allow different services to interact with each other and share data, enabling seamless integration and collaboration.

One of the key benefits of cloud-native applications is their ability to leverage cloud infrastructure for load balancing and auto-scaling. This helps applications handle increased traffic and demand with less risk of downtime or degraded performance.

Additionally, cloud-native applications emphasize automation and self-service capabilities. Through provisioning and orchestration tools, developers can easily deploy and manage applications, reducing manual effort and improving efficiency.

As you can see, cloud-native applications offer numerous advantages for businesses, including improved scalability, resilience, and faster time-to-market. By adopting cloud-native practices and technologies, organizations can accelerate their digital transformation and stay ahead of the competition.

Basics of cloud-native application architecture

Cloud-native application architecture is a fundamental concept in modern software development. It involves designing and building applications specifically for the cloud computing environment. This approach allows for greater scalability, flexibility, and resilience compared to traditional application architectures.

At its core, cloud-native architecture relies on the use of APIs, which are sets of rules and protocols that allow different software applications to communicate with each other. APIs enable seamless integration between different components of the application, such as the frontend and the backend.

Another important aspect of cloud-native architecture is load balancing. This technique distributes incoming network traffic across multiple servers, ensuring that no single server is overwhelmed with requests. Load balancing improves performance and prevents server downtime by distributing the workload evenly.
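Round-robin is one of the simplest load-balancing strategies: requests are handed to each server in turn. The sketch below illustrates it with `itertools.cycle`. The server names are hypothetical, and real load balancers usually also weigh server health and current load.

```python
import itertools
from typing import Iterable, Iterator


def round_robin(servers: Iterable[str]) -> Iterator[str]:
    """Yield servers in a repeating cycle so requests are spread evenly."""
    return itertools.cycle(list(servers))


backends = round_robin(["app-1", "app-2", "app-3"])  # hypothetical server names
# Assign five incoming requests to backends in turn.
assignments = [next(backends) for _ in range(5)]
```

Here the five requests go to `app-1`, `app-2`, `app-3`, `app-1`, and `app-2`, so no server receives more than one request above any other.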

Provisioning is another key concept in cloud-native architecture. It involves automatically allocating and configuring resources, such as servers, storage, and networking, based on the application’s needs. This allows for the efficient utilization of resources and enables rapid scalability.

Cloud-native applications are designed to be highly available and fault-tolerant. This is achieved through the use of redundancy, which involves duplicating critical components and data across multiple servers. If one server fails, the workload is automatically shifted to another server, ensuring continuous service availability.
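The failover behavior described above can be sketched as a client that tries each replica in turn and returns the first successful response. The replicas here are plain Python callables standing in for network calls, and all names are hypothetical.

```python
from typing import Callable, Sequence


def call_with_failover(replicas: Sequence[Callable[[str], str]], request: str) -> str:
    """Try each replica in turn and return the first successful response."""
    last_error = None
    for replica in replicas:
        try:
            return replica(request)
        except ConnectionError as exc:
            last_error = exc  # this replica is down; fall through to the next one
    raise RuntimeError("all replicas failed") from last_error


def broken(_request: str) -> str:
    """Stand-in for a failed server."""
    raise ConnectionError("replica unreachable")


def healthy(request: str) -> str:
    """Stand-in for a working server."""
    return f"handled {request}"
```

Calling `call_with_failover([broken, healthy], "GET /")` returns the healthy replica's response, while a list containing only failed replicas raises `RuntimeError`.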

Orchestration plays a crucial role in cloud-native architecture. It involves automating the deployment, management, and scaling of application components. Orchestration tools enable developers to define the desired state of the application and automatically handle the necessary changes to achieve that state.
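The desired-state idea can be illustrated with a small reconciliation function that compares what should be running with what is running and lists the actions needed to close the gap. This is a simplified sketch under assumed data shapes (service name mapped to replica count), not how any particular orchestrator is implemented.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], actual: Dict[str, int]) -> List[str]:
    """Return the actions needed to move `actual` replica counts to `desired`."""
    actions = []
    # Consider every service that appears in either state, in a stable order.
    for service in sorted(set(desired) | set(actual)):
        want = desired.get(service, 0)
        have = actual.get(service, 0)
        if want > have:
            actions.append(f"start {want - have} x {service}")
        elif want < have:
            actions.append(f"stop {have - want} x {service}")
    return actions
```

An orchestrator would run a comparison like this repeatedly, so that the system converges on the declared state even after failures or manual changes.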

Cloud-native architecture also emphasizes the use of containerization. Containers are lightweight, isolated environments that encapsulate an application and its dependencies. They provide consistent and reproducible environments across different platforms, making it easier to deploy and manage applications.

Cloud-native vs cloud-based apps comparison

| Features | Cloud-Native Apps | Cloud-Based Apps |
| --- | --- | --- |
| Elastic Scalability | Highly scalable and can automatically adjust resources based on demand | Scalability depends on the cloud infrastructure provider |
| Microservices Architecture | Designed to be composed of smaller, independent services that can be deployed and updated individually | Usually monolithic in nature, with all components tightly coupled |
| Containerization | Applications are packaged into containers, providing consistency and portability across different environments | Apps can be hosted on virtual machines or physical servers |
| DevOps Integration | Emphasizes collaboration and automation between development and operations teams | Traditional development and operations workflows |
| Resilience | Designed to handle failures gracefully and recover quickly | Reliability depends on the cloud infrastructure provider |
| Cloud Dependency | Can run on any cloud platform or on-premises infrastructure | Dependent on the cloud infrastructure provider |

The future of cloud-native applications and its impact

The future of cloud-native applications is set to have a significant impact on the technology landscape. As more businesses and organizations migrate their operations to the cloud, the demand for cloud-native applications is rapidly increasing. These applications are specifically designed and built to take full advantage of the cloud computing model, enabling greater scalability, flexibility, and efficiency.

One of the key benefits of cloud-native applications is their ability to leverage the power of computer networks and APIs. By using APIs, these applications can seamlessly integrate with other systems and services, creating a more cohesive and interconnected ecosystem. This allows for easier data sharing, streamlined workflows, and enhanced collaboration across different platforms and devices.

Additionally, cloud-native applications employ load balancing and provisioning techniques to optimize resource allocation and ensure high availability. By distributing workloads across multiple servers, these applications can handle increased traffic and maintain consistent performance even during peak usage periods. This scalability is especially crucial for internet-facing and mobile applications, which often experience fluctuating demand.

Moreover, cloud-native applications rely on standard communication protocols built on the Internet Protocol (IP), such as HTTP, to transfer data between clients and servers. This ensures that users can access and interact with the application seamlessly, regardless of their location or device.

Another important aspect of cloud-native applications is their ability to adapt and evolve throughout their lifecycle. These applications are designed with modularity and flexibility in mind, making it easier to update and enhance different components without disrupting the entire system. This enables businesses to respond quickly to changing market demands and deliver new features to users more efficiently.

To build and deploy cloud-native applications, developers rely on various tools, frameworks, and services provided by cloud providers. These tools enable efficient code development, testing, and deployment, while also providing monitoring and management capabilities.

However, it is important to note that transitioning to cloud-native applications also comes with risks. Network planning and design, as well as ensuring appropriate levels of security and redundancy, are essential to mitigate potential vulnerabilities and ensure business continuity.