Welcome to our comprehensive guide on Oracle Cloud Infrastructure (OCI), where we will explore everything you need to know about this powerful cloud platform.

Cloud Deployment Options

With OCI, you can take advantage of **supercomputer** capabilities, **artificial intelligence** tools, and **big data** analytics. Whether you’re looking to deploy **software as a service** or need a **data warehouse** solution, OCI has you covered.

By choosing OCI, you can benefit from the **security** and **reliability** of Oracle Corporation, while also gaining **scalability** and **flexibility** for your computing needs.

Cloud Infrastructure Services

| Service | Description |
| --- | --- |
| Compute | Provides virtual machines and bare metal instances to run any workload in the cloud. |
| Storage | Offers scalable and secure storage options, including block, object, and file storage. |
| Networking | Enables users to create and manage virtual networks, load balancers, and VPN connections. |
| Database | Provides managed database services, including Autonomous Database and MySQL. |
| Security | Offers advanced security features such as encryption, identity and access management, and security monitoring. |

Developer Tools and Services

Whether you’re a data scientist working on big data projects or a developer building microservices, OCI provides tools to support your work. With support for machine learning, artificial intelligence, and data warehousing, OCI is a comprehensive platform for a wide range of computing needs. Explore OCI’s pricing options to find a plan that fits your budget and requirements, and start your journey with Oracle Cloud Infrastructure today.

Data Management Solutions

Data management on OCI covers the efficient organization, storage, and processing of large volumes of data. With Oracle Database and Oracle Exadata, businesses can manage their data securely and with high performance. Oracle Cloud Infrastructure offers a range of data management tools and services, including data warehouse solutions, analytics platforms, and machine learning capabilities. By using OCI for data management, organizations can strengthen their business intelligence and base decisions on accurate insights.

Additionally, OCI provides scalability, security, and reliability for data workloads, supporting smooth operations for businesses of all sizes.

AI and Machine Learning Capabilities

AI and machine learning capabilities are core components of Oracle Cloud Infrastructure (OCI), letting you apply Oracle Corporation’s technology to your business operations. Whether you need supercomputer-scale power for complex analysis or generative artificial intelligence for new solutions, OCI has you covered. The platform integrates with tools such as Apache Hadoop and Apache Spark, making it easier to work with your data. OCI also provides reliable cloud storage options and supports multicloud environments for greater flexibility. Use OCI’s AI and machine learning capabilities to move your business forward in a competitive landscape.

Security and Compliance Features

OCI provides compliance certifications and audits that address industry standards and regulations, giving you confidence when it comes to data security. OCI also offers secure networking options: you can create isolated environments for your workloads and control access through network security groups.
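To make the access-control idea concrete, here is a minimal Python sketch of how allow-only ingress rules can be evaluated against incoming traffic. It is a simplified, hypothetical model: the `IngressRule` class and `is_allowed` function are invented for illustration and are not part of any OCI SDK. Each rule admits a source CIDR, a protocol, and a destination port range, and anything no rule matches is dropped. Real network security groups have more options, such as egress rules, stateless rules, and other network security groups as sources.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical, simplified model of an allow-only ingress security rule."""
    source_cidr: str  # e.g. "10.0.0.0/24"
    protocol: str     # "tcp" or "udp" in this sketch
    port_min: int     # inclusive destination port range
    port_max: int


def is_allowed(rules: list[IngressRule], src_ip: str, protocol: str, port: int) -> bool:
    """Return True if any rule admits the traffic; otherwise the default is deny."""
    src = ip_address(src_ip)
    for rule in rules:
        if (
            src in ip_network(rule.source_cidr)
            and rule.protocol == protocol
            and rule.port_min <= port <= rule.port_max
        ):
            return True
    return False  # no matching allow rule, so the traffic is dropped


# Hypothetical web-tier rules: HTTPS from anywhere, SSH only from an admin subnet.
web_tier_rules = [
    IngressRule("0.0.0.0/0", "tcp", 443, 443),
    IngressRule("10.0.0.0/24", "tcp", 22, 22),
]

print(is_allowed(web_tier_rules, "203.0.113.7", "tcp", 443))  # True
print(is_allowed(web_tier_rules, "203.0.113.7", "tcp", 22))   # False
print(is_allowed(web_tier_rules, "10.0.0.15", "tcp", 22))     # True
```

Because this model has no deny rules, tightening access means removing or narrowing an allow rule rather than adding a blocking rule.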

By leveraging OCI’s security and compliance features, you can confidently store your data in the cloud while adhering to strict security protocols and regulations. Oracle’s commitment to data protection and integrity makes OCI a reliable choice for businesses looking to migrate to the cloud.

Cost Management and Governance Tools

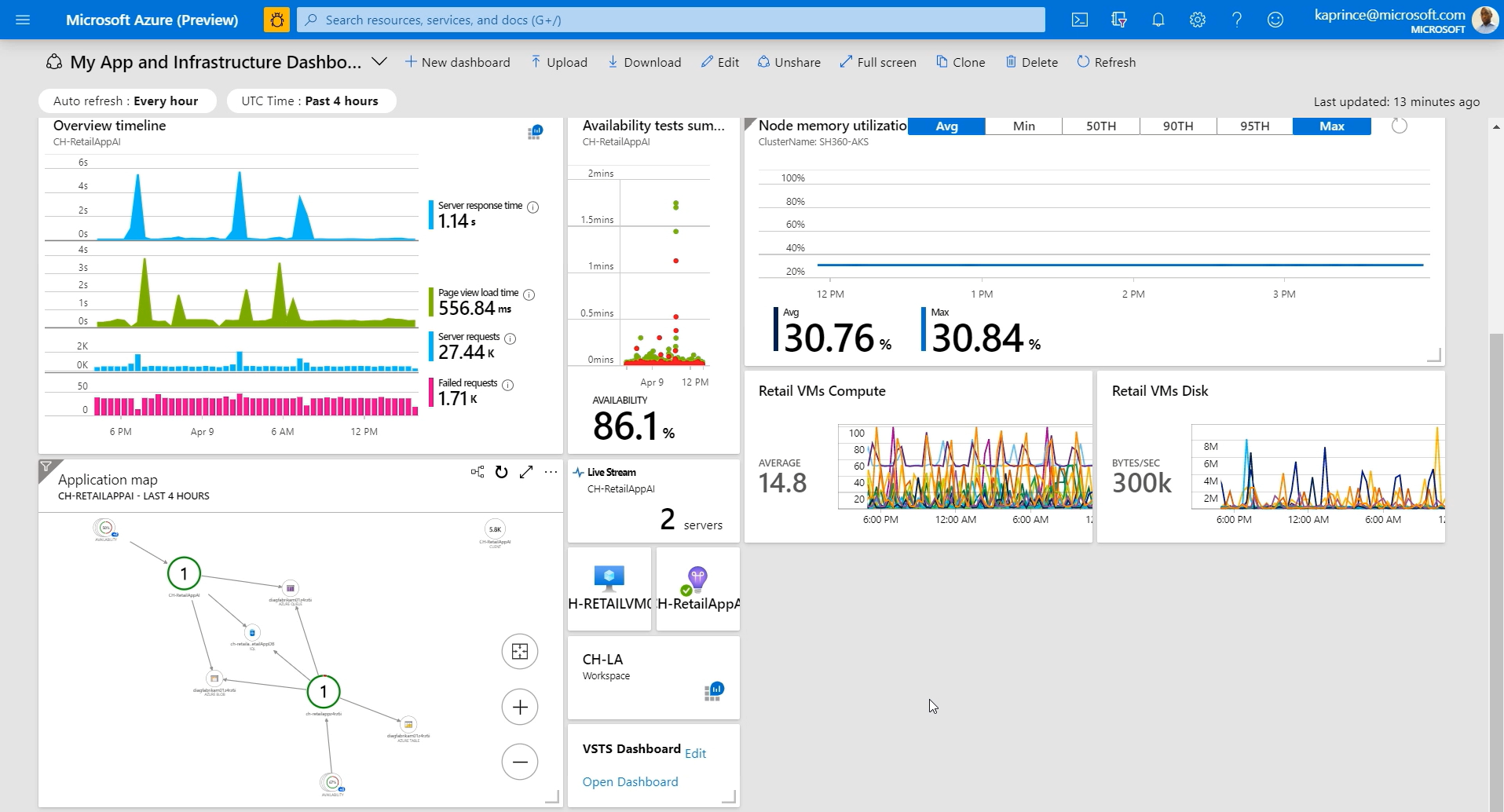

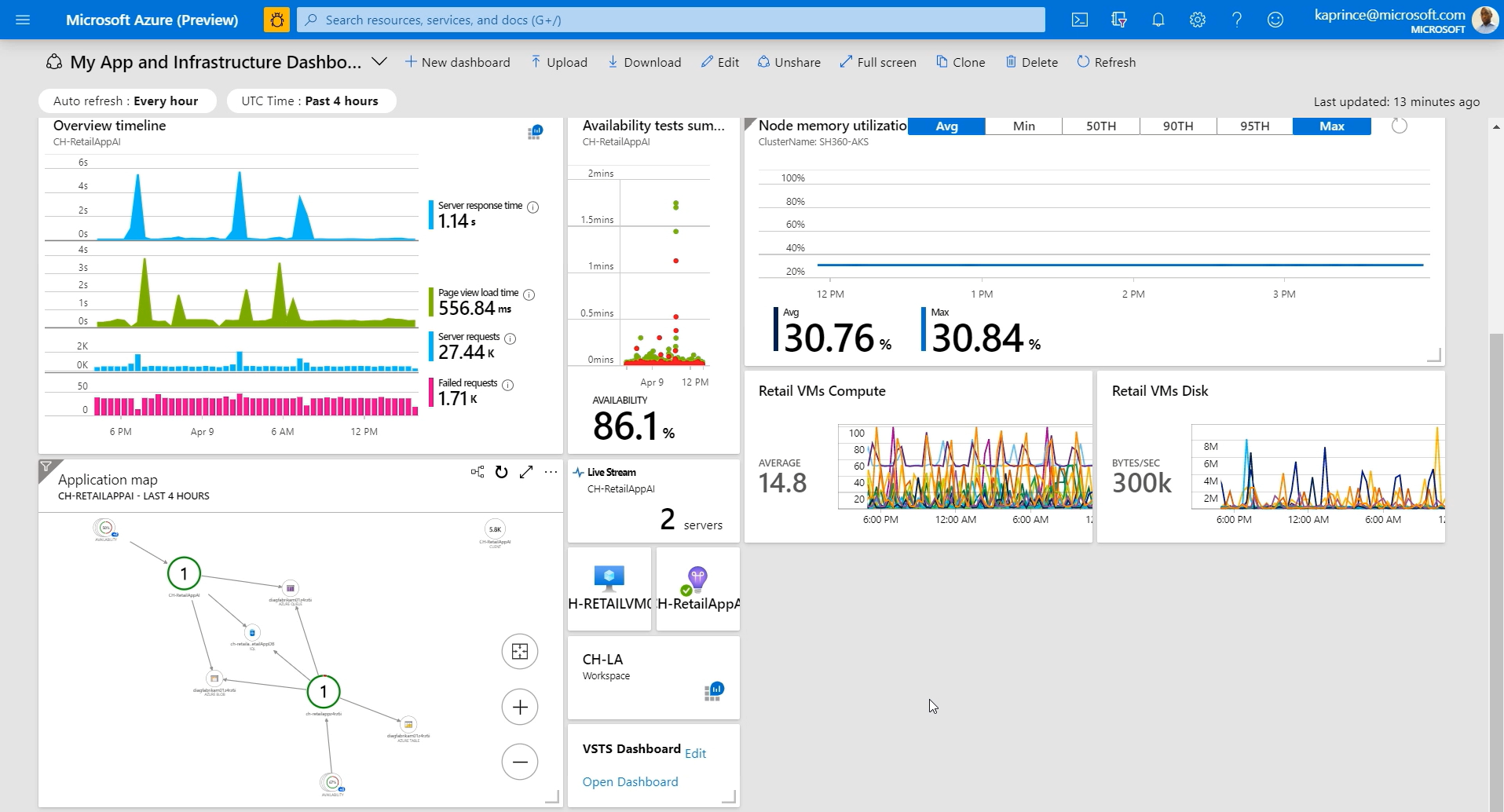

Using the OCI Console dashboards and the OCI command-line interface (CLI), you can monitor your usage, track costs, and set budgets to keep spending within your financial targets. Oracle Cloud Infrastructure also provides detailed reports and analysis to help you make informed decisions about resource allocation and optimization.
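As a rough illustration of how budget alerts on actual and forecast spend can work, the sketch below projects month-end spend linearly from the month-to-date figure and reports which percentage thresholds have been reached. The function names, the linear forecast, and the example figures are hypothetical assumptions for illustration; this is not the OCI Budgets API, whose forecasting and alert rules are defined in Oracle's documentation.

```python
def forecast_month_end(spend_to_date: float, days_elapsed: int, days_in_month: int) -> float:
    """Naive linear forecast: assume the average daily spend so far continues."""
    if not 0 < days_elapsed <= days_in_month:
        raise ValueError("days_elapsed must be between 1 and days_in_month")
    return spend_to_date / days_elapsed * days_in_month


def triggered_alerts(
    spend_to_date: float,
    days_elapsed: int,
    days_in_month: int,
    budget: float,
    thresholds_pct: list[int],
) -> list[str]:
    """Return one message per threshold reached by actual or forecast spend."""
    actual_pct = spend_to_date * 100 / budget
    forecast_pct = forecast_month_end(spend_to_date, days_elapsed, days_in_month) * 100 / budget
    alerts = []
    for pct in sorted(thresholds_pct):
        if actual_pct >= pct:
            alerts.append(f"ACTUAL spend reached {pct}% of budget")
        elif forecast_pct >= pct:
            alerts.append(f"FORECAST spend on track to reach {pct}% of budget")
    return alerts


# Hypothetical example: 600 spent against a 1,000 budget after 15 of 30 days.
for message in triggered_alerts(600, 15, 30, 1000, [50, 80, 100]):
    print(message)
# ACTUAL spend reached 50% of budget
# FORECAST spend on track to reach 80% of budget
# FORECAST spend on track to reach 100% of budget
```

With these numbers, actual spend (60% of budget) has crossed only the 50% threshold, while the forecast (120% of budget) already signals that 80% and 100% will be crossed, which is the early warning a forecast-based alert is meant to give.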

By leveraging OCI’s automation capabilities, you can streamline cost management processes and eliminate manual tasks, saving time and resources. With features like multicloud support and network virtualization, you can easily integrate Oracle Cloud Infrastructure with your existing systems and maximize efficiency across your organization.

Take advantage of Oracle’s industry-leading expertise in cloud computing and business intelligence to drive cost-effective strategies and achieve your goals with confidence. Oracle Cloud Infrastructure offers a comprehensive suite of tools and resources to help you navigate the complexities of cost management and governance in the cloud, empowering you to optimize your operations and maximize your ROI.

Free Tier Benefits

Whether you are looking to deploy applications, run workloads, or experiment with new technologies like Kubernetes or Apache Hadoop, the Free Tier provides a risk-free environment to test out different solutions. Additionally, the inclusion of services like Oracle Database, Apache Spark, and Elasticsearch allows for a comprehensive exploration of various data management and analytics tools.

By taking advantage of the Free Tier Benefits, users can gain hands-on experience with cloud computing, build their skills in areas like data science and DevOps, and even develop and deploy applications using tools like Docker and Kubernetes. This valuable resource opens up a world of possibilities for those looking to expand their knowledge and expertise in the realm of cloud technology.

Autonomous Database Quick Start

The Autonomous Database Quick Start in Oracle Cloud Infrastructure (OCI) simplifies setup: you can launch and manage a database with minimal configuration, and Autonomous Database automates routine maintenance such as patching, tuning, and backups. Whether you are new to OCI or an experienced user, the Quick Start is a straightforward way to kick off database projects and streamline your workflow.

Popular Application Architectures

Containerization tools like **Docker** have gained popularity for their ability to package applications and their dependencies in a portable format. This ensures consistency between development, testing, and production environments.

**Kubernetes** is another popular tool for managing containerized applications at scale. It automates deployment, scaling, and management of containerized applications in a **cloud-native** environment.

Container-based architectures like these are particularly well-suited to **cloud environments**, offering scalability, flexibility, and cost-efficiency. Oracle Cloud Infrastructure (OCI) provides a platform for running them, with features like **bare-metal servers**, **virtual machines**, and **cloud storage**.
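Kubernetes' automated scaling can be illustrated with the replica calculation described in the Horizontal Pod Autoscaler documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with scaling skipped when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below is a simplified model, not Kubernetes code: the function name and the min/max bounds are illustrative assumptions, and it ignores details such as stabilization windows, missing metrics, and pods that are not yet ready.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA-style calculation of how many replicas to run."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=60))   # 5: scale out
print(desired_replicas(4, current_metric=20, target_metric=60))   # 2: scale in
print(desired_replicas(3, current_metric=63, target_metric=60))   # 3: within tolerance
print(desired_replicas(8, current_metric=120, target_metric=60))  # 10: capped at max
```

Rounding up with `ceil` errs on the side of extra capacity, and the tolerance band keeps small metric fluctuations from causing constant scale-in and scale-out.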

Oracle Cloud Infrastructure Events

Whether you are interested in Microsoft Azure integration, data science applications, or automation tools, Oracle Cloud Infrastructure Events cover a wide range of topics to meet your needs. Stay updated on the latest developments in computer hardware, network virtualization, and software as a service through engaging sessions and workshops.

Take advantage of the opportunity to interact with industry leaders such as Larry Ellison and Satya Nadella, and gain valuable insights into the future of cloud technology. Whether you are a beginner or an experienced professional, Oracle Cloud Infrastructure Events offer something for everyone looking to explore the possibilities of cloud computing.

Don’t miss out on the chance to expand your knowledge, enhance your skills, and stay ahead of the curve in the rapidly evolving world of cloud computing with Oracle Cloud Infrastructure Events.

Reasons to Use Oracle Cloud

– Reliability: Oracle Cloud Infrastructure (OCI) offers a highly reliable platform for your business needs, backed by an uptime SLA of 99.95%. This helps keep your applications and data available when you need them.

– Performance: OCI provides high-performance computing capabilities, with options for both bare-metal servers and virtual machines. This lets you run demanding workloads, whether you’re using NVIDIA GPUs or running complex microservices architectures.

– Security: Oracle takes security seriously, with multiple layers of security measures in place to protect your data. From encryption at rest and in transit to granular access controls, you can trust that your information is safe in the Oracle Cloud.

– Cost-Efficiency: With competitive pricing options and a pay-as-you-go model, OCI allows you to scale your infrastructure as needed without breaking the bank. Whether you’re a small startup or a large enterprise, Oracle Cloud offers cost-effective solutions for your business.
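A 99.95% uptime figure is easier to reason about when converted into allowed downtime. The short Python calculation below assumes a 30-day month and a 365-day year and counts every minute equally; how Oracle's actual SLAs define the measurement period, covered services, and downtime is governed by Oracle's published SLA terms, which this sketch does not model.

```python
MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(sla_pct: float, period_days: float) -> float:
    """Minutes of downtime permitted by an uptime percentage over a period."""
    total_minutes = period_days * MINUTES_PER_DAY
    return total_minutes * (100 - sla_pct) / 100


month = allowed_downtime_minutes(99.95, 30)
year = allowed_downtime_minutes(99.95, 365)
print(f"30-day month: {month:.1f} minutes")                         # 30-day month: 21.6 minutes
print(f"365-day year: {year:.1f} minutes ({year / 60:.1f} hours)")  # 365-day year: 262.8 minutes (4.4 hours)
```

In other words, 99.95% availability still permits roughly 22 minutes of downtime in a 30-day month, which is worth factoring into recovery plans for critical workloads.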

Digital Transformation Benefits

– **Increased Efficiency**: With Oracle Cloud Infrastructure (OCI), businesses can streamline their operations and reduce manual processes through automation and advanced analytics.

– **Cost Savings**: OCI offers a pay-as-you-go model, allowing organizations to scale resources up or down based on their needs, ultimately saving on infrastructure costs.

– **Enhanced Security**: OCI provides security controls such as encryption and identity and access management, helping protect data and applications from potential threats and breaches.

– **Scalability**: Businesses can easily scale their operations with OCI, whether they need additional storage, computing power, or network resources.

– **Improved Performance**: OCI’s high-performance computing capabilities enable faster processing speeds and enhanced user experiences.

– **Flexibility**: OCI offers a wide range of services and tools, allowing businesses to customize their cloud environment to meet their specific requirements.
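The **Cost Savings** point above comes down to paying for capacity only while it is in use. The Python sketch below compares a pay-as-you-go bill with the cost of keeping peak capacity running all day. The demand profile and the rate of 0.25 per instance-hour are made-up figures, not OCI prices, and hourly billing granularity is a simplifying assumption.

```python
def pay_as_you_go_cost(hourly_demand: list[int], rate_per_instance_hour: float) -> float:
    """Pay only for the instances actually running in each hour."""
    return sum(hourly_demand) * rate_per_instance_hour


def peak_provisioned_cost(hourly_demand: list[int], rate_per_instance_hour: float) -> float:
    """Keep enough instances for the busiest hour running for the whole period."""
    return max(hourly_demand) * len(hourly_demand) * rate_per_instance_hour


# Hypothetical day: 2 instances for 16 quiet hours, 8 instances for 8 busy hours.
demand = [2] * 16 + [8] * 8
rate = 0.25  # hypothetical price per instance-hour

print(pay_as_you_go_cost(demand, rate))     # 24.0
print(peak_provisioned_cost(demand, rate))  # 48.0
```

With this spiky profile, scaling to demand halves the bill; for workloads that run near peak around the clock the gap shrinks, so the savings depend on how variable the demand is.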

Application Support and Performance

With OCI’s focus on performance optimization, your applications can deliver the speed and reliability your business demands. Whether you’re running on a **bare-metal server** or a **virtual machine**, OCI’s advanced network capabilities and support for technologies like **NVIDIA GPUs** help your applications perform at their best.

By leveraging OCI’s **command-line interface** and powerful tools, you can easily monitor and manage your applications to ensure they meet your performance goals. With OCI’s commitment to innovation and cutting-edge technology, you can stay ahead of the curve and drive your business forward.