In this tutorial, we will explore the steps to install Kubernetes on RedHat Linux, enabling you to efficiently manage containerized applications on your system.

Understanding Kubernetes Architecture

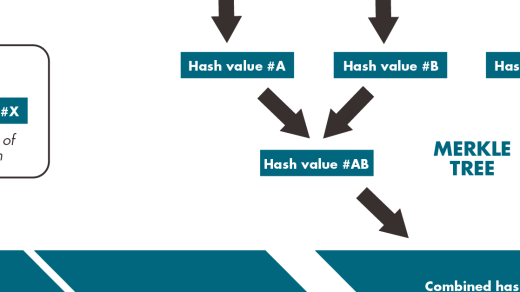

Kubernetes architecture consists of two main components: the **control plane** and the **nodes**. The control plane manages the cluster, while nodes are the worker machines where applications run. It’s crucial to understand how these components interact to effectively deploy and manage applications on Kubernetes.

The control plane includes components like the **kube-apiserver**, **kube-controller-manager**, **kube-scheduler**, and **etcd**, the key-value store that holds the cluster state. These components work together to maintain the desired state of the cluster and decide where applications should run. Nodes, in turn, run the applications: each node runs a **kubelet**, which starts and monitors containers on behalf of the control plane, and usually **kube-proxy**, which handles Service networking.
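The idea of a desired state can be illustrated with a toy control loop in Python. This is a simplified, hypothetical model (the `ToyCluster` class and `reconcile` function are illustrative and not part of Kubernetes): each pass compares the desired number of pods with what is running and creates or deletes pods to close the gap, which is roughly what controllers in the kube-controller-manager do for real resources.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToyCluster:
    """Hypothetical, simplified cluster state; not real Kubernetes code."""
    desired_replicas: int
    running_pods: List[str] = field(default_factory=list)
    next_id: int = 0


def reconcile(cluster: ToyCluster) -> List[str]:
    """One pass of a control loop: compare desired and observed state, then act.

    Returns the actions taken during this pass.
    """
    actions = []
    # Too few pods: create new ones until observed state matches desired state.
    while len(cluster.running_pods) < cluster.desired_replicas:
        name = f"pod-{cluster.next_id}"
        cluster.next_id += 1
        cluster.running_pods.append(name)
        actions.append(f"create {name}")
    # Too many pods: delete pods from the end of the list.
    while len(cluster.running_pods) > cluster.desired_replicas:
        actions.append(f"delete {cluster.running_pods.pop()}")
    return actions


if __name__ == "__main__":
    cluster = ToyCluster(desired_replicas=3)
    print(reconcile(cluster))              # creates pod-0, pod-1, pod-2
    cluster.running_pods.remove("pod-1")   # simulate a failed pod
    print(reconcile(cluster))              # creates pod-3 as a replacement
```

After the simulated failure, the next pass creates a replacement. Real controllers learn about changes by watching the API server rather than inspecting a local list, but the compare-and-act idea is the same.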

When installing Kubernetes on RedHat Linux, you will need to set up both the control plane and the nodes. This involves installing a container runtime such as CRI-O or containerd (since Kubernetes 1.24, Docker Engine is no longer supported directly as a runtime), initializing the control plane with kubeadm, and joining nodes to the cluster. The **kubectl** command-line tool, together with a **kubeconfig** file that holds the cluster address and credentials, lets you interact with the cluster and deploy applications.

Understanding Kubernetes architecture is essential for effectively managing containerized applications. By grasping the roles of the control plane and nodes, you can optimize your deployment strategies and ensure the scalability and reliability of your applications on Kubernetes.

Starting and Launching Kubernetes Pods

To start and launch Kubernetes Pods on RedHat Linux, you first need a working Kubernetes cluster. You can then create a Pod by describing it in a YAML (or JSON) configuration file that specifies at least the Pod's name and the container image to run, and start it with `kubectl apply -f <file>`.

Confirm that the Pod launched successfully with `kubectl get pod <name>`, which shows its status (for example, `Running` or `Pending`). If something goes wrong, `kubectl describe pod <name>` lists recent events such as image-pull or scheduling errors, and `kubectl logs <name>` shows the container's output.
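As a minimal sketch, the Python snippet below builds a Pod manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The Pod name `web`, the image `nginx:1.25`, and the helper names are example values, not anything prescribed by Kubernetes.

```python
import json
from typing import List


def pod_manifest(name: str, image: str, port: int) -> dict:
    """Minimal Pod manifest; the dict mirrors the structure of the YAML form."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {"name": name, "image": image, "ports": [{"containerPort": port}]}
            ]
        },
    }


def kubectl_steps(manifest_path: str, pod_name: str) -> List[List[str]]:
    """Apply the manifest, then check status, events, and container output."""
    return [
        ["kubectl", "apply", "-f", manifest_path],
        ["kubectl", "get", "pod", pod_name],
        ["kubectl", "describe", "pod", pod_name],
        ["kubectl", "logs", pod_name],
    ]


if __name__ == "__main__":
    # Redirect this output to web-pod.json; kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(pod_manifest("web", "nginx:1.25", 80), indent=2))
```

Save the output as `web-pod.json` and run the commands from `kubectl_steps("web-pod.json", "web")` in order: apply first, then inspect.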

To run multiple replicas of a Pod or deploy applications on a larger scale, define a Deployment rather than individual Pods, so that Kubernetes recreates Pods that fail. Tools like OpenShift or Ansible can further automate starting and launching Pods across a cluster.

Exploring Kubernetes Persistent Volumes

To explore **Kubernetes Persistent Volumes** on RedHat Linux, first you need to understand the concept of persistent storage in a Kubernetes cluster. Persistent Volumes allow data to persist beyond the lifecycle of a pod, so that data is not lost when a pod is deleted or rescheduled.

Running stateful applications on your cluster involves providing **Persistent Volumes** for them to store data. Applications request storage through Persistent Volume Claims (PVCs) in their YAML configuration files, specifying the storage class, access mode, and size. Kubernetes then binds each claim to a matching Persistent Volume, which is either created in advance by an administrator or provisioned dynamically through the storage class.

You can back Persistent Volumes with storage solutions such as NFS, iSCSI, or cloud storage providers. Replication and backup depend largely on the storage backend you choose; Kubernetes itself controls how a volume may be mounted (access modes) and what happens to it after its claim is deleted (reclaim policy).
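A PersistentVolumeClaim can be sketched the same way as a Pod manifest. In this Python example, the claim name `app-data`, the size `5Gi`, and the StorageClass name `nfs-client` are hypothetical; use a StorageClass that actually exists in your cluster (`kubectl get storageclass` lists them).

```python
import json


def pvc_manifest(name: str, size: str, storage_class: str,
                 access_mode: str = "ReadWriteOnce") -> dict:
    """PersistentVolumeClaim requesting `size` of storage from `storage_class`."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_volume_for_claim(volume_name: str, claim_name: str) -> dict:
    """Entry for a Pod's spec.volumes list; the data outlives the Pod that mounts it."""
    return {"name": volume_name, "persistentVolumeClaim": {"claimName": claim_name}}


if __name__ == "__main__":
    # "nfs-client" is a hypothetical StorageClass name.
    print(json.dumps(pvc_manifest("app-data", "5Gi", "nfs-client"), indent=2))
```

A Pod then lists `pod_volume_for_claim("data", "app-data")` under `spec.volumes` and mounts `data` in a container through `volumeMounts`.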

Managing Kubernetes SELinux Permissions

When managing **Kubernetes SELinux permissions** on **RedHat Linux**, it is crucial to understand how SELinux works and how it can impact your Kubernetes installation.

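To properly manage SELinux permissions, you will need to configure the necessary **security contexts** for **pods** and their containers, and make sure that the files and directories backing **persistent volumes** carry SELinux labels that containers are allowed to access (for example, the `container_file_t` type).

It is important to regularly audit and troubleshoot SELinux denials to ensure that your Kubernetes cluster is running smoothly and securely. Denials are recorded in the audit log and can be listed with `ausearch -m AVC`. Tools such as **audit2allow** can generate SELinux policy modules that allow the denied actions; review a generated module before loading it, since it may grant more access than the workload actually needs.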
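For example, a Pod can request a specific SELinux MCS level through `spec.securityContext.seLinuxOptions`. The Python sketch below builds such a manifest; the level `s0:c123,c456`, the Pod name, and the image are illustrative. Setting the level explicitly is useful, for instance, when several Pods must share a volume, because Pods with the same MCS level can access the same labeled files.

```python
import json


def pod_with_selinux_level(name: str, image: str, level: str) -> dict:
    """Pod manifest whose containers run with a fixed SELinux MCS level."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "securityContext": {
                # Pod-level setting, applied to all containers unless a container overrides it.
                "seLinuxOptions": {"level": level},
            },
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Level, name, and image are example values.
    print(json.dumps(pod_with_selinux_level("shared-data", "nginx:1.25", "s0:c123,c456"), indent=2))
```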

Configuring Networking for Kubernetes

To configure networking for **Kubernetes** on **RedHat Linux**, you need to start by ensuring that the host machine has the necessary network settings. This includes setting up a **static IP address** and configuring the **DNS resolver** to point to the correct servers.

Next, you will need to install a **network plugin** (CNI plugin) for Kubernetes, such as **Calico** or **Flannel**, to enable communication between pods across nodes. Both provide pod-to-pod connectivity within the cluster; Calico also enforces Kubernetes NetworkPolicy rules, while Flannel on its own does not.

You may also need to adjust the **firewall settings** to open the ports used by the Kubernetes components and by your network plugin. Additionally, setting up **ingress controllers** can help manage external HTTP and HTTPS access to services in your Kubernetes cluster.
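Beyond IP and DNS settings, kubeadm setup guides have long recommended loading the `overlay` and `br_netfilter` kernel modules and enabling the sysctl settings below, which make bridged pod traffic visible to iptables and turn on IPv4 forwarding. This Python sketch renders the two configuration files and reports which settings on the current host differ. The file names `/etc/modules-load.d/k8s.conf` and `/etc/sysctl.d/k8s.conf` are conventional choices, not requirements.

```python
from pathlib import Path
from typing import Callable, Dict, List, Optional

MODULES = ["overlay", "br_netfilter"]
SYSCTLS: Dict[str, str] = {
    "net.bridge.bridge-nf-call-iptables": "1",
    "net.bridge.bridge-nf-call-ip6tables": "1",
    "net.ipv4.ip_forward": "1",
}


def render_modules_conf(modules: List[str]) -> str:
    """Content for a modules-load.d file: one kernel module per line."""
    return "".join(f"{m}\n" for m in modules)


def render_sysctl_conf(settings: Dict[str, str]) -> str:
    """Content for a sysctl.d file: one 'key = value' line per setting."""
    return "".join(f"{key} = {value}\n" for key, value in settings.items())


def proc_path(key: str) -> Path:
    # sysctl keys map to files under /proc/sys, with dots replaced by slashes.
    return Path("/proc/sys") / key.replace(".", "/")


def read_current(key: str) -> Optional[str]:
    try:
        return proc_path(key).read_text().strip()
    except OSError:
        return None  # e.g. the bridge keys are absent until br_netfilter is loaded


def settings_to_fix(settings: Dict[str, str],
                    read: Callable[[str], Optional[str]] = read_current) -> List[str]:
    """Keys whose current value differs from the desired one."""
    return [key for key, want in settings.items() if read(key) != want]


if __name__ == "__main__":
    print(render_modules_conf(MODULES), end="")
    print(render_sysctl_conf(SYSCTLS), end="")
    print("needs fixing:", settings_to_fix(SYSCTLS))
```

Writing the files requires root; afterwards, `modprobe br_netfilter` loads the module immediately and `sysctl --system` applies the settings without a reboot.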

Installing CRI-O Container Runtime

To install the CRI-O container runtime on RedHat Linux, begin by updating the system with the DNF package manager. Next, enable a repository that provides CRI-O; choose the CRI-O release whose minor version matches the Kubernetes version you plan to install, since CRI-O releases follow Kubernetes minor versions. Then install the `cri-o` package with DNF, which also pulls in its dependencies.

After installation, start the `crio` service with systemd and enable it to start at boot. Verify the installation by checking the CRI-O version with `crio --version`. You can now proceed with setting up Kubernetes on your RedHat Linux system with CRI-O as the container runtime.

Keep in mind that CRI-O is a lightweight alternative to Docker for running containers in a Kubernetes environment. It implements the Kubernetes Container Runtime Interface (CRI) directly and is designed specifically for Kubernetes, which keeps it smaller than a general-purpose container engine.
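The steps above can be scripted. This Python sketch prints the commands in dry-run mode by default and runs them with `subprocess` only when `dry_run=False` (as root). The repository step is deliberately left out because the repository location depends on the CRI-O version you choose; add it according to the CRI-O installation documentation.

```python
import shlex
import subprocess
from typing import List


def crio_install_plan() -> List[List[str]]:
    """Commands for the CRI-O steps described above (run as root).

    The repository-setup step is intentionally omitted: its URL depends on the
    CRI-O version, so add it from the CRI-O installation docs first.
    """
    return [
        ["dnf", "-y", "update"],
        ["dnf", "-y", "install", "cri-o"],
        ["systemctl", "enable", "--now", "crio"],  # start now and at every boot
        ["crio", "--version"],
    ]


def run_plan(plan: List[List[str]], dry_run: bool = True) -> List[str]:
    """Print (dry run) or execute each command; return the commands as shell strings."""
    lines = []
    for cmd in plan:
        line = shlex.join(cmd)
        if dry_run:
            print(f"[dry-run] {line}")
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on the first failure
        lines.append(line)
    return lines


if __name__ == "__main__":
    run_plan(crio_install_plan())
```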

Creating a Kubernetes Cluster

To create a Kubernetes cluster on RedHat Linux, start by installing a container runtime (such as CRI-O) and the `kubeadm`, `kubelet`, and `kubectl` packages with DNF on every node. Next, initialize the Kubernetes control-plane (master) node with the `kubeadm init` command. Then join the worker nodes to the cluster with the `kubeadm join` command that `kubeadm init` prints at the end of its output; it contains a bootstrap token and a hash of the cluster's CA certificate.

Ensure that the necessary ports are open on all nodes for communication within the cluster. Optionally, use Ansible to automate these steps and manage the cluster configuration. Verify the cluster status with `kubectl get nodes` and deploy applications from YAML files with `kubectl apply -f`.

Monitor the cluster using the Kubernetes Dashboard (an optional add-on) or the command-line interface. Use Deployments, Pods, and Services to manage applications; Deployments have replaced the older ReplicationControllers for keeping a set number of Pod replicas running. Regularly update the cluster components and apply security patches to keep the cluster secure.
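A sketch of the `kubeadm` invocations, built as argument lists in Python so they can be reviewed or passed to `subprocess.run`. The pod CIDR, control-plane address, token, and hash in the usage example are placeholders; the real token and hash come from your own `kubeadm init` output. The format checks reflect the documented shape of bootstrap tokens (six and sixteen lowercase alphanumerics separated by a dot) and CA hashes (`sha256:` followed by 64 hex digits).

```python
import re
import shlex
from typing import List, Optional

TOKEN_RE = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")
CA_HASH_RE = re.compile(r"^sha256:[0-9a-f]{64}$")


def kubeadm_init_cmd(pod_cidr: str, advertise_address: Optional[str] = None) -> List[str]:
    """Command to initialize the control-plane node."""
    cmd = ["kubeadm", "init", f"--pod-network-cidr={pod_cidr}"]
    if advertise_address:
        # Otherwise kubeadm uses the address of the default network interface.
        cmd.append(f"--apiserver-advertise-address={advertise_address}")
    return cmd


def kubeadm_join_cmd(endpoint: str, token: str, ca_cert_hash: str) -> List[str]:
    """Command to run on a worker node; the values come from `kubeadm init` output."""
    if not TOKEN_RE.match(token):
        raise ValueError(f"unexpected token format: {token!r}")
    if not CA_HASH_RE.match(ca_cert_hash):
        raise ValueError("CA hash must look like sha256:<64 hex digits>")
    return ["kubeadm", "join", endpoint, "--token", token,
            "--discovery-token-ca-cert-hash", ca_cert_hash]


if __name__ == "__main__":
    print(shlex.join(kubeadm_init_cmd("192.168.0.0/16", "10.0.0.10")))
    print(shlex.join(kubeadm_join_cmd(
        "10.0.0.10:6443",
        "abcdef.0123456789abcdef",   # placeholder token
        "sha256:" + "0" * 64,        # placeholder hash
    )))
```

After `kubeadm init` succeeds, copy `/etc/kubernetes/admin.conf` to `$HOME/.kube/config` (as the `kubeadm init` output instructs) so that `kubectl` can reach the new cluster.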

Setting up Calico Pod Network Add-on

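To set up the Calico Pod Network Add-on on Kubernetes running on RedHat Linux, first make sure the pod network CIDR you passed to `kubeadm init` (the `--pod-network-cidr` flag) matches the IP pool configured for Calico and does not overlap with your node or service networks. Every node must also be able to pull the Calico images, or have them preloaded in an air-gapped environment. Then apply the Calico manifests from the control-plane node with `kubectl`, following the Calico documentation for your version.

Worker nodes do not need a separate Calico step: Calico runs its `calico-node` agent as a DaemonSet, so Kubernetes schedules a copy on every node that joins the cluster. Once all nodes have joined, verify that a `calico-node` pod is running on each node with `kubectl get pods -A -o wide`.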

Finally, test the connectivity between pods on different nodes to confirm that the Calico network is functioning as expected. With these steps completed, your Kubernetes cluster on RedHat Linux should now be utilizing the Calico Pod Network Add-on for efficient communication between pods.
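Overlapping address ranges are a common cause of broken pod networking. This Python sketch, using only the standard `ipaddress` module, checks a candidate pod CIDR against your node network and kubeadm's default Service CIDR (`10.96.0.0/12`). The node network `192.168.1.0/24` is a hypothetical home or office LAN, which would clash with `192.168.0.0/16`, a pod CIDR often used in Calico's examples.

```python
import ipaddress
from typing import List

# kubeadm's default Service CIDR (used unless you pass --service-cidr).
DEFAULT_SERVICE_CIDR = "10.96.0.0/12"


def cidr_conflicts(pod_cidr: str, other_networks: List[str]) -> List[str]:
    """Return the entries of other_networks that overlap the pod network."""
    pod_net = ipaddress.ip_network(pod_cidr)
    conflicts = []
    for net in other_networks:
        # strict=False accepts host addresses such as "192.168.1.20/24".
        if pod_net.overlaps(ipaddress.ip_network(net, strict=False)):
            conflicts.append(net)
    return conflicts


if __name__ == "__main__":
    node_networks = ["192.168.1.0/24"]  # hypothetical node LAN
    for candidate in ["192.168.0.0/16", "10.244.0.0/16"]:
        found = cidr_conflicts(candidate, node_networks + [DEFAULT_SERVICE_CIDR])
        print(candidate, "conflicts with", found if found else "nothing")
```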

Joining Worker Node to the Cluster

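To join a worker node to the cluster, the node first needs a container runtime and the `kubeadm` and `kubelet` packages installed, and the control-plane (master) node must already be initialized and running.

To join the node, run `kubeadm join` on it with the token and CA certificate hash that `kubeadm init` printed. Bootstrap tokens expire after 24 hours by default; if yours has expired, print a fresh join command on the control-plane node with `kubeadm token create --print-join-command`.

Make sure your worker node meets the minimum requirements: at least 2 GB of RAM, a compatible operating system, a unique hostname, MAC address, and product_uuid, and swap disabled (by default the kubelet refuses to start when swap is on). Control-plane nodes additionally need at least 2 CPUs.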

Follow the step-by-step instructions in the official Kubernetes (kubeadm) documentation to add your worker node to the cluster.
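The hardware requirements above can be checked with a short Python script that reads `/proc/meminfo` and `/proc/swaps` on the node. This is a rough pre-check under stated assumptions (a 2048 MiB threshold and Linux `/proc` files), not a replacement for the preflight checks that `kubeadm join` runs itself.

```python
import os
from pathlib import Path
from typing import List


def parse_mem_total_kib(meminfo: str) -> int:
    """Return MemTotal from /proc/meminfo text (the kernel reports it in kB, i.e. KiB)."""
    for line in meminfo.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1])
    raise ValueError("MemTotal not found")


def swap_enabled(swaps: str) -> bool:
    """/proc/swaps has a header line plus one line per active swap area."""
    return len(swaps.strip().splitlines()) > 1


def node_problems(meminfo: str, swaps: str, cpus: int, control_plane: bool,
                  min_mem_mib: int = 2048) -> List[str]:
    problems = []
    mem_mib = parse_mem_total_kib(meminfo) // 1024
    # MemTotal is a little below installed RAM, so a "2 GB" machine may need a lower threshold.
    if mem_mib < min_mem_mib:
        problems.append(f"only {mem_mib} MiB RAM")
    if control_plane and cpus < 2:
        problems.append(f"only {cpus} CPU(s); control-plane nodes need at least 2")
    if swap_enabled(swaps):
        problems.append("swap is enabled")
    return problems


if __name__ == "__main__" and Path("/proc/meminfo").exists():
    swaps_file = Path("/proc/swaps")
    found = node_problems(
        Path("/proc/meminfo").read_text(),
        swaps_file.read_text() if swaps_file.exists() else "",
        os.cpu_count() or 1,
        control_plane=False,
    )
    print("\n".join(found) if found else "basic requirements look fine")
```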

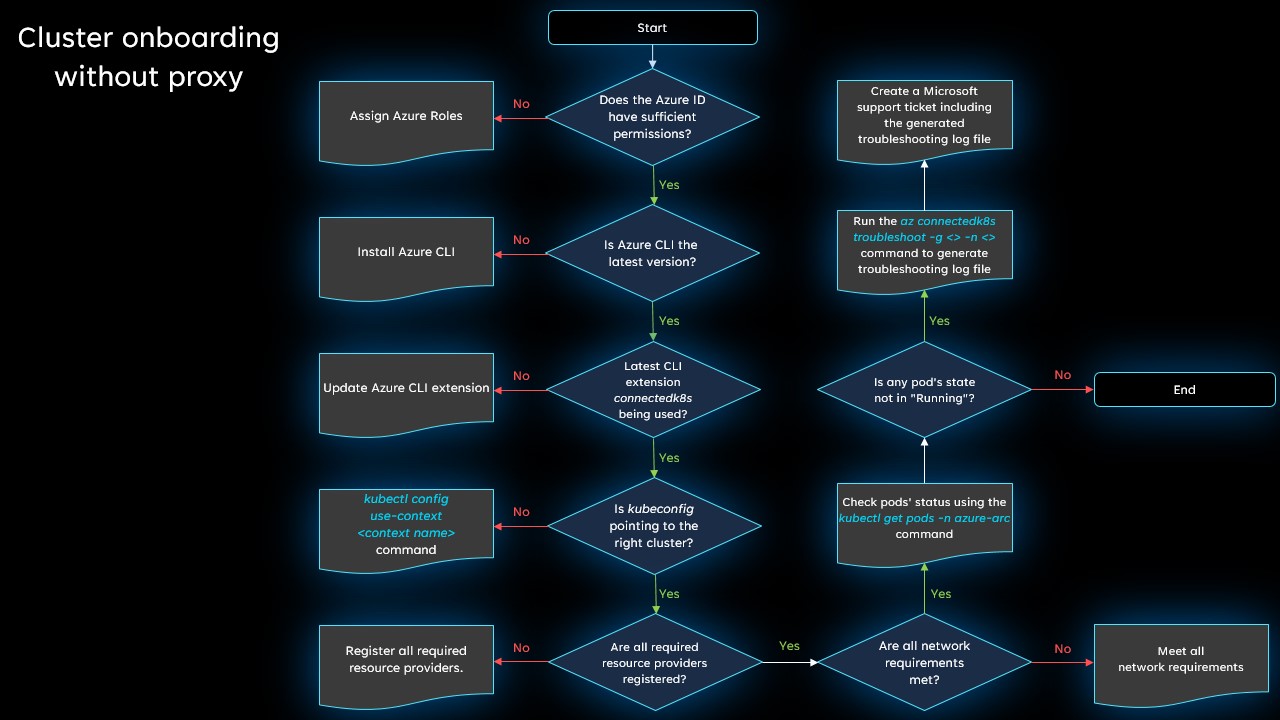

Troubleshooting Kubernetes Installation

To troubleshoot Kubernetes installation on RedHat Linux, first check that all the necessary dependencies are installed and properly configured. Ensure that the container runtime (for example, CRI-O or containerd) is correctly set up and running. Verify that the Kubernetes package repository is added to the system and that compatible versions of kubeadm, kubelet, and kubectl are installed.

Check the status of the Kubernetes control-plane and worker nodes with `kubectl get nodes`. Make sure that every node is in the `Ready` state; nodes report `NotReady` until a pod network add-on is installed. If there are any issues, look for error messages in the logs and troubleshoot accordingly.

If the installation is still not working, try restarting the kubelet and the container runtime with `systemctl restart kubelet` and `systemctl restart crio` (or `systemctl restart containerd` if you use containerd). Additionally, check the firewall settings to ensure that the necessary ports are open for Kubernetes communication.

If you encounter any errors during the installation process, refer to the official Kubernetes documentation or seek help from the community forums. Troubleshooting Kubernetes installation on RedHat Linux may require some technical knowledge, so don’t hesitate to ask for assistance if needed.
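To spot problem nodes quickly, you can filter the output of `kubectl get nodes --no-headers`. This Python sketch assumes the default column layout (NAME, STATUS, ROLES, AGE, VERSION); the `not_ready_nodes` helper is hypothetical.

```python
import shutil
import subprocess
from typing import List, Tuple


def not_ready_nodes(output: str) -> List[Tuple[str, str]]:
    """Parse `kubectl get nodes --no-headers` output; return (name, status) of unready nodes."""
    problems = []
    for line in output.strip().splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue
        name, status = fields[0], fields[1]
        # STATUS may combine conditions, e.g. "Ready,SchedulingDisabled" for a cordoned node.
        if "Ready" not in status.split(","):
            problems.append((name, status))
    return problems


if __name__ == "__main__" and shutil.which("kubectl"):
    result = subprocess.run(["kubectl", "get", "nodes", "--no-headers"],
                            capture_output=True, text=True)
    if result.returncode != 0:
        print("kubectl failed:", result.stderr.strip())
    else:
        for name, status in not_ready_nodes(result.stdout):
            print(f"{name} is {status}; check `kubectl describe node {name}`")
```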

Preparing Containerized Applications for Kubernetes

To prepare containerized applications for Kubernetes on RedHat Linux, start by ensuring that your system meets the necessary requirements. Install a container runtime that implements the Kubernetes CRI, such as CRI-O or containerd; you can still build images with Docker or Podman, because Kubernetes runs any OCI-compliant image. Next, set up a Kubernetes cluster, optionally using tools like Ansible or OpenShift to automate the process.

Familiarize yourself with systemd for managing services in RedHat Linux: the kubelet runs as a systemd service, and on kubeadm clusters it in turn runs the control-plane components as static pods. Use DNF to install kubeadm, kubelet, and kubectl from the official Kubernetes package repository (pkgs.k8s.io). Make sure your server has access to the Internet to download the necessary packages, container images, and updates.
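The repository definition follows the layout published in the Kubernetes installation docs for RPM-based distributions; this Python sketch renders it for a chosen minor version. `v1.30` is only an example version, so substitute the release you intend to run and check the current docs in case the layout has changed.

```python
import re


def kubernetes_repo(minor: str) -> str:
    """Render /etc/yum.repos.d/kubernetes.repo for one Kubernetes minor version, e.g. 'v1.30'."""
    if not re.fullmatch(r"v1\.\d+", minor):
        raise ValueError("expected a minor version such as 'v1.30'")
    base = f"https://pkgs.k8s.io/core:/stable:/{minor}/rpm/"
    return (
        "[kubernetes]\n"
        "name=Kubernetes\n"
        f"baseurl={base}\n"
        "enabled=1\n"
        "gpgcheck=1\n"
        f"gpgkey={base}repodata/repomd.xml.key\n"
        # Keeps a routine `dnf update` from upgrading cluster packages unexpectedly.
        "exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni\n"
    )


if __name__ == "__main__":
    print(kubernetes_repo("v1.30"), end="")
```

Save the output as `/etc/yum.repos.d/kubernetes.repo`, then install with `dnf install -y kubelet kubeadm kubectl --disableexcludes=kubernetes` (the flag lifts the `exclude` line for this one command) and enable the kubelet with `systemctl enable --now kubelet`.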

Configure your RedHat Linux server to act as a Kubernetes control-plane (master) node by installing the required components. Set up worker nodes to join the cluster, allowing for distributed computing across multiple machines. Follow best practices for securing your Kubernetes cluster, such as restricting access to the API server, and for high availability run multiple control-plane nodes and multiple replicas of your applications.

Regularly monitor the health and performance of your Kubernetes cluster using tools like Prometheus and Grafana. Stay updated on the latest Kubernetes releases and apply updates as needed to ensure optimal performance. With proper setup and maintenance, your containerized applications will run smoothly on Kubernetes in a RedHat Linux environment.

Debugging and Inspecting Kubernetes

To properly debug and inspect **Kubernetes** on **RedHat Linux**, you first need to ensure that you have the necessary tools and access levels. Make sure you have **sudo** privileges to make system-level changes.

Use **kubectl** to interact with the Kubernetes cluster and inspect resources. Check the status of pods, services, and deployments using **kubectl get** commands.

For debugging, utilize **kubectl logs** to view container logs and troubleshoot any issues. You can also use **kubectl exec** to access a running container and run commands for further investigation.
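These kubectl calls are easy to wrap in a helper when you debug the same workloads repeatedly. The Python sketch below only assembles argument lists (the function names are hypothetical); `--previous` is particularly useful for a container stuck in a crash loop, since the current instance may not have logged anything yet.

```python
import shlex
from typing import List, Optional


def logs_cmd(pod: str, namespace: str = "default",
             container: Optional[str] = None, previous: bool = False) -> List[str]:
    cmd = ["kubectl", "logs", pod, "-n", namespace]
    if container:
        cmd += ["-c", container]  # needed when the Pod has more than one container
    if previous:
        cmd.append("--previous")  # logs of the last terminated container, e.g. after a crash
    return cmd


def exec_shell_cmd(pod: str, namespace: str = "default", shell: str = "/bin/sh") -> List[str]:
    # "--" separates kubectl's own flags from the command run inside the container.
    return ["kubectl", "exec", "-it", pod, "-n", namespace, "--", shell]


if __name__ == "__main__":
    print(shlex.join(logs_cmd("web", previous=True)))
    print(shlex.join(exec_shell_cmd("web")))
```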

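Additionally, you can enable more detailed **debugging** output on the **Kubernetes master node** by raising the log verbosity of control-plane components with their `--v` flag (higher values produce more detailed logs). On a kubeadm cluster, kube-apiserver runs as a static pod, so you add the flag to its command in `/etc/kubernetes/manifests/kube-apiserver.yaml`; the kubelet restarts the pod when the file changes.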

Troubleshooting Kubernetes systemd Services

When troubleshooting **Kubernetes systemd services** on RedHat Linux, start by checking the status of the systemd services using the `systemctl status` command. This will provide information on whether the services are active, inactive, or have encountered any errors.

If the services are not running as expected, you can try restarting them using the `systemctl restart` command. This can help resolve issues related to the services not starting properly.

Another troubleshooting step is to review the logs for the systemd services. You can view them with `journalctl`, for example `journalctl -u kubelet`, which shows the errors and warnings each service has logged.

If you are still experiencing issues with the systemd services, you may need to dive deeper into the Kubernetes configuration files on RedHat Linux, such as `/var/lib/kubelet/config.yaml` for the kubelet and the files under `/etc/kubernetes/` written by kubeadm. Make sure all configurations are set up correctly and are in line with the requirements for running Kubernetes.
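The status and log checks can be combined into a small Python helper that lists inactive units together with the `journalctl` command to inspect them. The unit names are assumptions (`kubelet` plus `crio` for a CRI-O runtime), and the command runner is passed in as a parameter so the logic can be tested without systemd.

```python
import shutil
import subprocess
from typing import Callable, Dict, List

Runner = Callable[[List[str]], subprocess.CompletedProcess]


def is_active(unit: str, run: Runner = subprocess.run) -> bool:
    # `systemctl is-active --quiet` prints nothing and exits 0 only when the unit is active.
    return run(["systemctl", "is-active", "--quiet", unit]).returncode == 0


def journal_cmd(unit: str, since: str = "-10min") -> List[str]:
    return ["journalctl", "-u", unit, "--since", since, "--no-pager"]


def diagnose(units: List[str], run: Runner = subprocess.run) -> Dict[str, List[str]]:
    """Map each inactive unit to the journalctl command worth running next."""
    return {unit: journal_cmd(unit) for unit in units if not is_active(unit, run)}


if __name__ == "__main__" and shutil.which("systemctl"):
    # "crio" assumes CRI-O is the runtime; use "containerd" if that is what you installed.
    for unit, cmd in diagnose(["kubelet", "crio"]).items():
        print(f"{unit} is not active; try: {' '.join(cmd)}")
```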

Troubleshooting Techniques for Kubernetes

– When troubleshooting Kubernetes on RedHat Linux, one common issue to check is the status of the kubelet service using the systemctl command. Make sure it is running and active to ensure proper functioning of the Kubernetes cluster.

– Another useful technique is to inspect the logs of the Kubernetes components such as kube-scheduler, kube-controller-manager, and kube-apiserver. On kubeadm clusters these run as pods in the `kube-system` namespace, so `kubectl logs -n kube-system <pod>` works for them. Their logs can provide valuable insights into any errors or issues that may be affecting the cluster.

– If you encounter networking problems, check that the kube-proxy pods (a DaemonSet in kubeadm clusters) are running and that the network plugin is properly configured. Issues with network connectivity can often cause problems in Kubernetes clusters.

– Utilizing the kubectl command-line tool can also be helpful in troubleshooting Kubernetes on RedHat Linux. Use commands such as kubectl get pods, kubectl describe pod, and kubectl logs to gather information about the state of the cluster and troubleshoot any issues.

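On a kubeadm-built cluster, the component logs mentioned in the list above are reachable through kubectl. This Python sketch assembles the commands; it assumes a kubeadm cluster, and the node name `cp1` is hypothetical (use the control-plane name from `kubectl get nodes`). If the API server itself is down, kubectl cannot reach these logs, and `crictl logs` on the control-plane node is the usual fallback.

```python
from typing import List

CONTROL_PLANE_COMPONENTS = ["kube-apiserver", "kube-controller-manager", "kube-scheduler", "etcd"]


def control_plane_log_cmds(node_name: str, tail: int = 50) -> List[List[str]]:
    """kubectl commands to read control-plane logs on a kubeadm cluster.

    kubeadm runs these components as static pods; the API server shows each one as a
    mirror pod named <component>-<node name> in the kube-system namespace.
    """
    return [
        ["kubectl", "logs", "-n", "kube-system", f"{component}-{node_name}", f"--tail={tail}"]
        for component in CONTROL_PLANE_COMPONENTS
    ]


def kube_proxy_pods_cmd() -> List[str]:
    # kubeadm deploys kube-proxy as a DaemonSet whose pods carry the label k8s-app=kube-proxy.
    return ["kubectl", "get", "pods", "-n", "kube-system", "-l", "k8s-app=kube-proxy", "-o", "wide"]


if __name__ == "__main__":
    # "cp1" is a hypothetical control-plane node name.
    for cmd in control_plane_log_cmds("cp1") + [kube_proxy_pods_cmd()]:
        print(" ".join(cmd))
```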

Checking Firewall and yaml/json Files for Kubernetes

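When installing Kubernetes on RedHat Linux, it is crucial to check the firewall settings to ensure proper communication between nodes. Make sure to open the ports Kubernetes needs, for example TCP 6443 for the API server on control-plane nodes and TCP 10250 for the kubelet on every node. On RedHat Linux, you can do this with `firewall-cmd --permanent --add-port=<port>/<protocol>` followed by `firewall-cmd --reload`.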
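The port lists below follow the Kubernetes "Ports and Protocols" reference for the control plane (API server, etcd, kubelet, scheduler, controller manager) and for worker nodes (kubelet, kube-proxy health checks, NodePort Services); check them against the docs for your version. The Python sketch only generates `firewall-cmd` commands. Your network plugin needs its own ports on top of these (Calico, for example, uses TCP 179 for BGP).

```python
import shlex
from typing import List

# Ports from the Kubernetes "Ports and Protocols" reference for kubeadm-style clusters.
CONTROL_PLANE_PORTS = ["6443/tcp", "2379-2380/tcp", "10250/tcp", "10259/tcp", "10257/tcp"]
WORKER_PORTS = ["10250/tcp", "10256/tcp", "30000-32767/tcp"]


def firewall_cmds(ports: List[str]) -> List[List[str]]:
    """firewall-cmd invocations that open `ports` permanently in the default zone."""
    cmds = [["firewall-cmd", "--permanent", f"--add-port={port}"] for port in ports]
    # Permanent rules only take effect in the running firewall after a reload.
    cmds.append(["firewall-cmd", "--reload"])
    return cmds


if __name__ == "__main__":
    for cmd in firewall_cmds(CONTROL_PLANE_PORTS):
        print(shlex.join(cmd))
```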

Additionally, it is important to review the YAML and JSON files used for Kubernetes configuration. These files dictate the behavior of your Kubernetes cluster, so it is essential to verify their accuracy and completeness. Look for errors or misconfigurations that may cause issues during deployment; `kubectl apply --dry-run=server -f <file>` asks the API server to validate a manifest without creating anything.
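Before a server-side dry run, a quick local check can catch malformed files. This Python sketch handles JSON manifests only (YAML would need a third-party parser such as PyYAML) and checks just the fields every Kubernetes object needs; the helper name is hypothetical, and the API server remains the authority on whether a manifest is valid.

```python
import json
from typing import List

REQUIRED_FIELDS = ("apiVersion", "kind", "metadata")


def manifest_problems(text: str) -> List[str]:
    """Cheap local checks on a single JSON manifest before sending it to the cluster."""
    try:
        doc = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} (line {exc.lineno})"]
    if not isinstance(doc, dict):
        return ["top level must be a JSON object"]
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in doc]
    metadata = doc.get("metadata")
    if isinstance(metadata, dict) and not (metadata.get("name") or metadata.get("generateName")):
        problems.append("metadata.name (or generateName) is missing")
    return problems


if __name__ == "__main__":
    print(manifest_problems('{"apiVersion": "v1", "kind": "Pod", "metadata": {}}'))
```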

Regularly auditing both firewall settings and configuration files is a good practice to ensure the smooth operation of your Kubernetes cluster. By maintaining a secure and properly configured environment, you can optimize the performance of your applications and services running on Kubernetes.

Additional Information and Conclusion

In conclusion, installing Kubernetes on RedHat Linux is a valuable skill that can enhance your understanding of container orchestration and management. By following the steps outlined in this guide, you can set up a powerful platform for deploying and managing your applications in a clustered environment.

Tools such as **Ansible** and **Docker** can further streamline working with your Kubernetes installation: Ansible can automate the setup of your nodes, and Docker can build the container images for the web applications you deploy to your RedHat Linux server.

By gaining hands-on experience with Kubernetes, you will also develop a deeper understanding of how to scale your applications, manage resources efficiently, and ensure high availability for your services. This knowledge will be invaluable as you work with computer networks, databases, and other components of modern IT infrastructure.