Welcome to this beginner's tutorial on Kubernetes, the container orchestration platform. We will walk through its fundamentals, from nodes and pods to networking, storage, and configuration, so that you finish with a solid foundation for understanding and navigating the technology.

Introduction to Kubernetes

Kubernetes is an open-source platform that allows you to automate the deployment, scaling, and management of containerized applications. It is designed to simplify the management of complex applications in a distributed computing environment.

With Kubernetes, you can manage and scale your applications across multiple machines, whether they are physical or virtual. It provides a robust and flexible platform for running your applications in the cloud or in your own data center.

One of the key benefits of Kubernetes is its ability to handle the complexities of modern application development. It provides a declarative model, where you define the desired state of your application in manifest files, usually written in YAML (JSON is accepted as well). Kubernetes controllers then continuously compare the actual state of the cluster with that desired state and work to close any gap between them.
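The declarative idea can be pictured as a loop that compares desired state with observed state and derives the actions needed to close the gap. The following is a minimal Python sketch of that idea, not actual Kubernetes code; the function `reconcile` and the pod names are hypothetical and chosen only for illustration.

```python
def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the actions a controller would take to reach the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods are running: create the missing ones.
        return [f"create pod-{len(running_pods) + i}" for i in range(diff)]
    if diff < 0:
        # Too many pods are running: delete the surplus (the last ones listed).
        return [f"delete {name}" for name in running_pods[diff:]]
    # Observed state already matches desired state: nothing to do.
    return []


print(reconcile(3, ["pod-0"]))
print(reconcile(1, ["pod-0", "pod-1", "pod-2"]))
```

You only ever state the target (`desired_replicas`); the loop works out whether that means creating or deleting pods.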

Kubernetes also provides a powerful set of APIs that allows you to interact with the platform programmatically. This means that you can automate tasks, such as deployment and scaling, using your favorite programming language.
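As a rough illustration of how the API is organized, the sketch below builds the REST path for a namespaced resource. It assumes the standard layout in which the core group (`v1`) is served under `/api` and named groups such as `apps/v1` under `/apis`; the helper name `resource_path` is hypothetical, and a real program would normally use an official client library and handle authentication.

```python
def resource_path(api_version: str, namespace: str, plural: str, name: str = "") -> str:
    """Build the REST path of a namespaced resource collection or single object."""
    # The core group ("v1") lives under /api; named groups ("apps/v1") live under /apis.
    prefix = "/apis" if "/" in api_version else "/api"
    path = f"{prefix}/{api_version}/namespaces/{namespace}/{plural}"
    return f"{path}/{name}" if name else path


print(resource_path("v1", "default", "pods"))
print(resource_path("apps/v1", "default", "deployments", "web"))
```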

In addition, Kubernetes offers features like load balancing, service discovery, and persistent storage, which are essential for running reliable and scalable applications. It also exposes container logs, events, and health checks, making it easier to troubleshoot issues and optimize performance.

Nodes and Namespaces in Kubernetes

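| Term | Description |
|---|---|
| Node | A worker machine in Kubernetes, physical or virtual, responsible for running the containers of the pods scheduled to it. |
| Node Name | A unique identifier for a node within the cluster. |
| Node Selector | A pod field (`nodeSelector`) that restricts scheduling to nodes whose labels match the given key-value pairs. |
| Node Affinity | A more expressive form of node selection that lets a pod state required or preferred rules about node labels. |
| Node Taints | A method to repel pods from being scheduled on specific nodes, unless the pods have matching tolerations. |
| Namespace | A logical partition of the cluster that scopes the names of resources such as pods and services. Nodes themselves are not namespaced. |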
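To make the interplay of node selectors, taints, and tolerations more concrete, here is a simplified Python sketch of the matching rules. It is not the real scheduler: the flat `pod` and `node` dictionaries are hypothetical shapes for illustration, every taint is treated as if it blocked scheduling, and node affinity is left out.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Simplified check of whether one toleration matches one taint."""
    # An empty effect on the toleration matches any taint effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    if toleration.get("operator", "Equal") == "Exists":
        # With Exists, an empty key matches every taint key.
        return not toleration.get("key") or toleration["key"] == taint["key"]
    return toleration.get("key") == taint["key"] and toleration.get("value") == taint.get("value")


def can_schedule(pod: dict, node: dict) -> bool:
    """True if node labels satisfy nodeSelector and every taint is tolerated."""
    labels = node.get("labels", {})
    selector_ok = all(labels.get(k) == v for k, v in pod.get("nodeSelector", {}).items())
    taints_ok = all(
        any(tolerates(t, taint) for t in pod.get("tolerations", []))
        for taint in node.get("taints", [])
    )
    return selector_ok and taints_ok


node = {
    "labels": {"disktype": "ssd"},
    "taints": [{"key": "dedicated", "value": "gpu", "effect": "NoSchedule"}],
}
toleration = {"key": "dedicated", "operator": "Equal", "value": "gpu", "effect": "NoSchedule"}
pod_without_toleration = {"nodeSelector": {"disktype": "ssd"}}
pod_with_toleration = {"nodeSelector": {"disktype": "ssd"}, "tolerations": [toleration]}

print(can_schedule(pod_without_toleration, node))
print(can_schedule(pod_with_toleration, node))
```

The selector attracts a pod to suitable nodes, while the taint keeps away every pod that does not explicitly tolerate it.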

Managing Pods and ReplicaSets

Pods are the smallest deployable units in Kubernetes. A pod encapsulates one or more containers, along with shared resources such as storage volumes and a network identity. A pod can be thought of as a single instance of an application running on a node in the cluster.

ReplicaSets, on the other hand, are responsible for ensuring that a specified number of identical pods are running at all times. They are used to scale applications horizontally by creating multiple replicas of a pod.

To manage pods and ReplicaSets, you use kubectl, the Kubernetes command-line interface (CLI), or the Kubernetes API directly. Both let you interact with the cluster and perform operations such as creating, updating, and deleting pods and ReplicaSets.

When managing pods, you can use YAML files to define their specifications, including the container image, resources, and environment variables. This declarative approach allows you to easily version and reproduce your pod configurations.
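As a sketch of what such a specification contains, the Python snippet below builds a Pod manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The pod name, image tag, environment variable, and resource figures are placeholder assumptions, not recommendations.

```python
import json

# A minimal Pod manifest; all concrete values are illustrative placeholders.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",  # container image to run
                "env": [{"name": "LOG_LEVEL", "value": "info"}],
                "resources": {
                    "requests": {"cpu": "100m", "memory": "128Mi"},  # used for scheduling
                    "limits": {"cpu": "250m", "memory": "256Mi"},  # enforced ceiling
                },
            }
        ]
    },
}

manifest = json.dumps(pod, indent=2)
print(manifest)
```

Saving the output to a file and committing it to version control gives you the reproducible, declarative record described above.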

ReplicaSets can be managed by specifying the desired number of replicas in the YAML file or using the kubectl scale command. This makes it easy to scale your application up or down based on demand.
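The sketch below shows where the replica count lives in a ReplicaSet manifest and what changing it amounts to. The manifest values are placeholders and `with_replicas` is a hypothetical helper; on a real cluster the imperative equivalent would be a command such as `kubectl scale --replicas=5 rs/web`.

```python
import copy

# A minimal ReplicaSet manifest; the selector must match the pod template's labels.
replica_set = {
    "apiVersion": "apps/v1",
    "kind": "ReplicaSet",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "nginx:1.25"}]},
        },
    },
}


def with_replicas(manifest: dict, count: int) -> dict:
    """Return a copy of the manifest with a new desired replica count."""
    if count < 0:
        raise ValueError("replicas must be non-negative")
    scaled = copy.deepcopy(manifest)  # leave the original manifest untouched
    scaled["spec"]["replicas"] = count
    return scaled


scaled = with_replicas(replica_set, 5)
print(scaled["spec"]["replicas"])
```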

In addition to managing individual pods and ReplicaSets, Kubernetes provides powerful features for managing the overall health and availability of your applications. These include load balancing, service discovery, and automatic replacement of failed pods.

Deploying and Scaling Applications in Kubernetes

To deploy an application in Kubernetes, you need to create a deployment object that defines the desired state of your application. This includes specifying the container image, the number of replicas, and any resources or dependencies your application requires. Kubernetes will then create and manage the necessary pods to run your application.
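A Deployment manifest along these lines can be sketched in Python as follows. The helper `make_deployment`, the application name, the image reference, the container port, and the rolling-update settings are all illustrative assumptions; the printed JSON could be piped to `kubectl apply -f -`.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal Deployment manifest for a single-container application."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector tells the Deployment which pods it owns.
            "selector": {"matchLabels": labels},
            # Replace pods gradually so the application stays available during updates.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 8080}]}
                    ]
                },
            },
        },
    }


deployment = make_deployment("web", "example.com/web:1.0", 3)
print(json.dumps(deployment, indent=2))
```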

Scaling applications in Kubernetes is straightforward. You can scale your application horizontally by increasing or decreasing the number of replicas. This allows you to handle increased traffic or scale down during periods of low demand. Kubernetes also supports automatic scaling based on resource usage, such as CPU or memory.
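The core calculation behind automatic scaling can be sketched in a few lines. The Horizontal Pod Autoscaler documentation describes the rule as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up. The function below is a simplified illustration that ignores details such as tolerance bands and stabilization windows; the function and parameter names are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Simplified autoscaling rule: scale in proportion to metric / target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target, bounded to 1..10 replicas.
print(desired_replicas(4, 90, 60, 1, 10))
```

With these numbers the rule asks for 6 replicas, because the pods are running at 1.5 times the target utilization.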

Kubernetes provides built-in load balancing to distribute traffic to your application across multiple pods. This ensures high availability and prevents any single pod from becoming a bottleneck. Additionally, Kubernetes allows you to expose your application to the outside world through services. Services provide a stable network endpoint and can be configured to load balance traffic to your application.

Debugging applications in Kubernetes can be done using various tools and techniques. You can use kubectl or the optional Kubernetes Dashboard add-on to inspect the state of your application and troubleshoot any issues. Kubernetes also integrates with popular logging and monitoring tools, allowing you to gain insights into the performance and health of your applications.

To achieve high availability and fault tolerance, Kubernetes replicates your application across multiple nodes in a cluster. It automatically handles node failures by rescheduling pods on healthy nodes. This ensures that your application remains available even if individual nodes or pods fail.

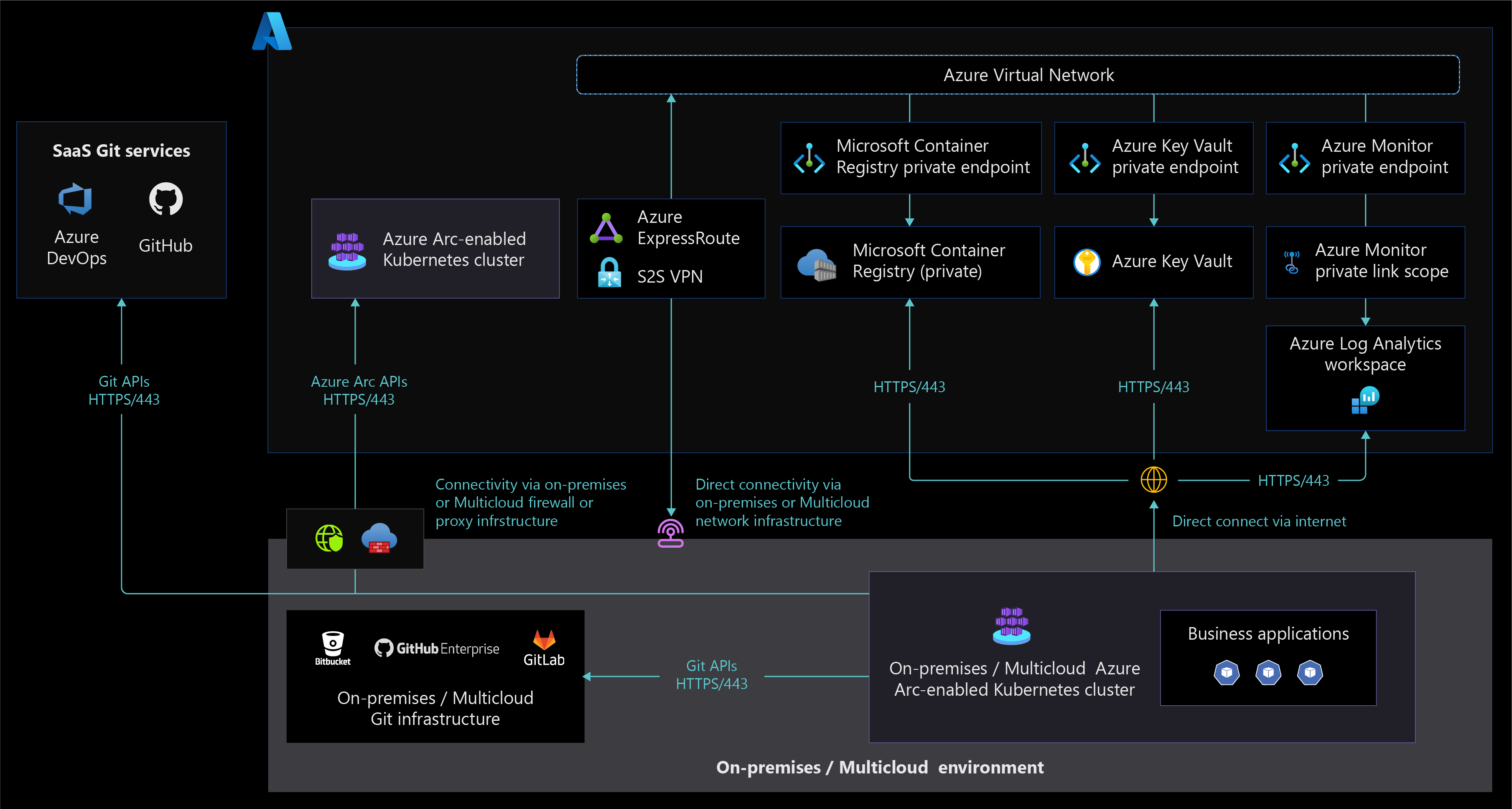

Kubernetes is designed to be cloud-agnostic and can run on various cloud providers, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. It also supports on-premises deployments, allowing you to run Kubernetes in your own data center or virtualization environment.

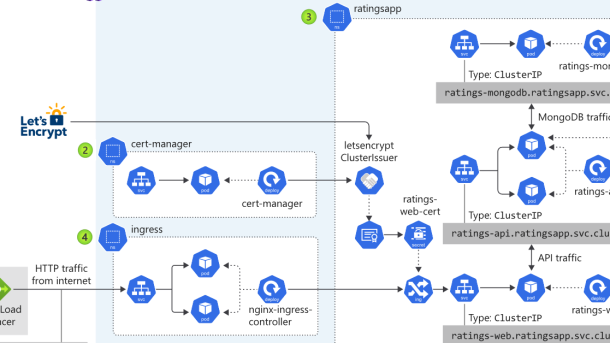

Services and Networking in Kubernetes

In Kubernetes, services play a crucial role in enabling communication between different components of an application. Services act as an abstraction layer that allows pods to interact with each other and with external resources. By defining a service, you can expose your application to the outside world and allow it to be accessed by other pods or services.

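Several components share the work of networking in Kubernetes. Pod-to-pod connectivity is provided by a network plugin, while a component called kube-proxy, which runs on each node, routes traffic addressed to a service to one of the pods behind it. Kube-proxy typically uses iptables or IPVS rules to implement this load balancing. Service discovery is usually handled by the cluster's DNS add-on, which gives each service a stable DNS name.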

When it comes to networking, Kubernetes offers different types of services. ClusterIP is the default service type and it provides internal access to the service within the cluster. NodePort allows you to expose the service on a specific port across all nodes in the cluster. LoadBalancer is used to expose the service externally using a cloud provider’s load balancer. Finally, there is ExternalName, which allows you to map a service to an external DNS name.

To create a service in Kubernetes, you need to define a YAML file that describes the desired state of the service. This includes the type of service, the ports it should listen on, and any selectors to identify the pods that should be part of the service.
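The sketch below shows the three parts mentioned above (type, ports, and selector) in a Service manifest, together with a simplified view of how the selector picks pods by their labels. The names, labels, and port numbers are placeholders, and `selects` is a hypothetical helper, not part of any Kubernetes library.

```python
# A minimal Service manifest; ClusterIP is the default type.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "type": "ClusterIP",
        "selector": {"app": "web"},
        # Clients connect to port 80; traffic is forwarded to port 8080 in the pod.
        "ports": [{"protocol": "TCP", "port": 80, "targetPort": 8080}],
    },
}


def selects(svc: dict, pod_labels: dict) -> bool:
    """True if the pod carries every label named in the service's selector."""
    return all(pod_labels.get(k) == v for k, v in svc["spec"]["selector"].items())


print(selects(service, {"app": "web", "tier": "frontend"}))
print(selects(service, {"app": "db"}))
```

A pod may carry extra labels and still be selected; only the labels named in the selector have to match.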

Networking in Kubernetes can be a complex topic, but understanding the basics is essential for managing and deploying applications in a Kubernetes cluster. By mastering services and networking, you can ensure that your applications are accessible and can communicate with each other effectively.

Keep in mind that Kubernetes is just one piece of the puzzle when it comes to managing a cloud-native infrastructure. It is often used in conjunction with other tools and platforms such as Docker, OpenShift, and Google Cloud Platform. Having a solid understanding of these technologies will greatly enhance your Kubernetes skills and make you a valuable asset in the world of cloud computing.

So, if you're a beginner looking to get started with Kubernetes, make sure to invest some time in learning about services and networking. It will open up a whole new world of possibilities and help you take your Kubernetes skills to the next level.

Managing Persistent Storage in Kubernetes

| Topic | Description |
|---|---|
| Persistent Volumes | A storage abstraction provided by Kubernetes to decouple storage from pods. Persistent Volumes (PVs) exist independently of pods and can be created manually or provisioned dynamically. |
| Persistent Volume Claims | A request for storage made by a user. A Persistent Volume Claim (PVC) is bound to a PV, and pods reference the claim to access the underlying storage. |
| Storage Classes | A way to dynamically provision PVs based on predefined storage configurations. Storage Classes allow users to request storage without having to manually create PVs. |
| Volume Modes | Defines how a volume is presented to pods. There are two modes: Filesystem and Block. Filesystem is the default and mounts the volume into the pod as a directory; Block exposes it as a raw block device without a filesystem. |
| Access Modes | Defines how a PV can be mounted. The most common access modes are ReadWriteOnce, ReadOnlyMany, and ReadWriteMany. ReadWriteOnce allows read-write access by a single node, ReadOnlyMany allows read-only access by multiple nodes, and ReadWriteMany allows read-write access by multiple nodes. Recent Kubernetes versions also offer ReadWriteOncePod, which restricts read-write access to a single pod. |
| Volume Snapshots | A way to create point-in-time snapshots of PVs. Volume snapshots can be used for data backup, migration, or cloning. |
| Subpath Mounts | A feature that allows mounting a subdirectory of a volume into a pod. Subpath mounts are useful when multiple containers within a pod need access to different directories within the same volume. |
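To show how these pieces connect, here is a sketch of a Persistent Volume Claim and the pod fields that reference it, built as Python dictionaries. The claim name, the storage class name `standard`, the requested size, and the mount paths are assumptions for illustration; available storage classes differ from cluster to cluster.

```python
import json

# A claim for 1 GiB of storage from an assumed storage class named "standard".
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "data"},
    "spec": {
        "storageClassName": "standard",
        "accessModes": ["ReadWriteOnce"],
        "volumeMode": "Filesystem",
        "resources": {"requests": {"storage": "1Gi"}},
    },
}

# In the pod spec, a volume refers to the claim by name ...
volume = {"name": "data", "persistentVolumeClaim": {"claimName": "data"}}

# ... and a container mounts that volume, here only its "db" subdirectory.
volume_mount = {"name": "data", "mountPath": "/var/lib/app", "subPath": "db"}

print(json.dumps(pvc, indent=2))
```

The pod never names a specific PV; it only names the claim, and Kubernetes binds the claim to a suitable volume.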

Secrets and ConfigMaps in Kubernetes

In Kubernetes, Secrets and ConfigMaps are essential components for managing and storing sensitive information and configuration data.

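Secrets are used to store sensitive data, such as passwords, API keys, and tokens, separately from pod definitions and container images. By default their values are only base64-encoded, not encrypted, so you should enable encryption at rest and restrict access with role-based access control (RBAC) so that only authorized applications and users in the cluster can read them.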

ConfigMaps, on the other hand, store non-sensitive configuration data, such as environment variables, file paths, and command-line arguments. They provide a way to decouple configuration from application code and make it easier to manage and update configuration settings.

To create a Secret or ConfigMap in Kubernetes, you can use the command line interface (CLI) or define them in a YAML file. Once created, they can be referenced by pods or other resources in the cluster.

Secrets and ConfigMaps can be mounted as volumes in a pod, allowing applications to access the stored data as files, or exposed to containers as environment variables. This enables applications to read configuration settings or use sensitive data during runtime.
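The following sketch builds a Secret and a ConfigMap as Python dictionaries and shows the volume entry a pod would use to mount the Secret as files. Values in a Secret's `data` field are base64-encoded, which is an encoding and not encryption. The helper `make_secret`, the object names, and the sample values are hypothetical.

```python
import base64


def make_secret(name: str, values: dict[str, str]) -> dict:
    """Build an Opaque Secret manifest with base64-encoded values."""
    data = {k: base64.b64encode(v.encode()).decode() for k, v in values.items()}
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": data,
    }


secret = make_secret("db-credentials", {"password": "example-password"})

# ConfigMap values are stored as plain text.
config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config"},
    "data": {"LOG_LEVEL": "info"},
}

# Pod-level volume that exposes each key of the Secret as a file.
secret_volume = {"name": "credentials", "secret": {"secretName": "db-credentials"}}

# Anyone who can read the Secret can decode it again.
print(base64.b64decode(secret["data"]["password"]).decode())
```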

It's important to note that Secrets and ConfigMaps are not meant to be used for storing large amounts of data; each object is limited to roughly 1 MiB. For storing larger files or other types of data, it's recommended to use volumes, cloud storage solutions, or other external storage systems.

By using Secrets and ConfigMaps effectively, you can enhance the security and flexibility of your Kubernetes deployments. They provide a way to centralize and manage configuration data, making it easier to maintain and update applications running in your cluster.

Advanced Features and Conclusion

In this section, we will explore some of the advanced features of Kubernetes and provide a conclusion to our Kubernetes tutorial for beginners.

Kubernetes offers a wide range of features that can enhance your experience with container orchestration. These include load balancing, which distributes traffic across the pods of your application so that no single instance is overloaded. Kubernetes also follows a declarative approach, allowing you to define the desired state of your applications and let Kubernetes handle the complexity of managing them.

Another important feature of Kubernetes is its support for persistent storage. By using storage offered by cloud providers such as Amazon Web Services, or storage in your own data center, Kubernetes can keep your application data available even when pods are restarted or rescheduled. This persistence is crucial for maintaining the state of your applications and ensuring a seamless user experience.

Kubernetes also provides advanced networking capabilities for controlling traffic within your cluster. You can define network policies that restrict which pods may talk to each other and control access to your services, provided the cluster's network plugin enforces them. These networking features make Kubernetes a powerful tool for building scalable and secure applications.
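As an illustration of a network policy, the sketch below defines one that lets only pods labelled `app: frontend` reach pods labelled `app: db` on TCP port 5432, plus a heavily simplified check of that rule. The labels, port, and policy name are placeholders; `allows_ingress` is a hypothetical helper that handles only `podSelector` peers with `matchLabels`, and real enforcement is done by the cluster's network plugin.

```python
network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "allow-frontend-to-db"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "db"}},  # pods the policy protects
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
    },
}


def allows_ingress(policy: dict, source_labels: dict, port: int) -> bool:
    """Simplified check: does any ingress rule admit this source pod on this port?"""
    for rule in policy["spec"].get("ingress", []):
        ports = rule.get("ports", [])
        peers = rule.get("from", [])
        # An omitted ports or from list means "all ports" or "all sources".
        port_ok = not ports or any(p["port"] == port for p in ports)
        source_ok = not peers or any(
            "podSelector" in peer
            and all(
                source_labels.get(k) == v
                for k, v in peer["podSelector"].get("matchLabels", {}).items()
            )
            for peer in peers
        )
        if port_ok and source_ok:
            return True
    return False


print(allows_ingress(network_policy, {"app": "frontend"}, 5432))
print(allows_ingress(network_policy, {"app": "batch"}, 5432))
```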

In conclusion, Kubernetes is a powerful open-source platform that simplifies the deployment and management of containerized applications. With its rich feature set and its availability on cloud providers like Amazon Web Services and in distributions like OpenShift, Kubernetes provides a robust and flexible platform for running your applications.

By mastering Kubernetes, you can take control of your containerized applications, streamline your development process, and improve the scalability and reliability of your software. Whether you are a beginner or an experienced developer, learning Kubernetes can greatly enhance your skills and open new opportunities in the world of cloud-native computing.

So, what are you waiting for? Dive into the world of Kubernetes and start your journey towards becoming proficient in container orchestration!