Welcome to the ultimate beginner’s guide to Kubernetes! In this article, we will walk you through the basics of Kubernetes and help you grasp the fundamentals of container orchestration. Let’s dive in and demystify Kubernetes together.

Kubernetes Basics

– Kubernetes is an open-source platform designed to automate deploying, scaling, and operating application containers. It allows you to manage a *cluster* of Linux containers as a single system.

– To start using Kubernetes, you need to have a basic understanding of Linux and containerization. If you are new to these concepts, consider taking a Linux training course to get familiar with the fundamentals.

– In Kubernetes, you define how your application containers should run, scale, and interact with each other using *pods*, *services*, and other resources. This helps in orchestrating your containers effectively.

– By using Kubernetes, you can improve the reliability and scalability of your application, minimize downtime, and enhance the overall user experience. It simplifies the process of managing containerized applications in a cluster environment.

– As you delve deeper into Kubernetes, you will discover its powerful features for automating tasks, monitoring applications, and troubleshooting issues. With practice and experience, you can become proficient in using Kubernetes to optimize your containerized applications.

Key Concepts in Kubernetes

Three resource types come up in almost every Kubernetes workload: pods, services, and deployments.

One of the key concepts in Kubernetes is the idea of **pods**. Pods are the smallest deployable units in Kubernetes and can contain one or more containers that share resources such as storage and networking.
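As a rough illustration, the sketch below builds a Pod manifest as a plain Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The pod name, labels, and container images are placeholders chosen for this example.

```python
import json

def make_pod(name, containers, labels):
    """Return a minimal Pod manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": labels},
        "spec": {"containers": containers},
    }

# Two containers in one pod share the pod's network namespace,
# so the sidecar can reach the web server on localhost.
pod = make_pod(
    name="web",
    labels={"app": "web"},
    containers=[
        {"name": "server", "image": "nginx:1.27", "ports": [{"containerPort": 80}]},
        {"name": "sidecar", "image": "busybox:1.36", "command": ["sh", "-c", "sleep 3600"]},
    ],
)

print(json.dumps(pod, indent=2))
```

In practice, pods are rarely created directly like this; a controller such as a Deployment usually creates them for you.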

Another important concept is **services**. Services in Kubernetes allow you to define a set of pods and how they should be accessed. This abstraction helps in load balancing, service discovery, and more.
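A service finds its pods through label selectors. The sketch below builds a minimal Service manifest and imitates the matching rule with a small helper. The pod names and labels are made up for the example, and `matches` is a simplified stand-in for what Kubernetes does internally, not part of any Kubernetes API.

```python
import json

def make_service(name, selector, port, target_port):
    """Return a minimal Service manifest (type defaults to ClusterIP when omitted)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": selector,
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }

def matches(selector, pod_labels):
    """A pod is selected when it carries every key/value pair in the selector."""
    return all(pod_labels.get(key) == value for key, value in selector.items())

service = make_service("web", {"app": "web"}, port=80, target_port=8080)

# Hypothetical pods and their labels, for illustration only.
pods = {
    "web-1": {"app": "web", "tier": "frontend"},
    "web-2": {"app": "web"},
    "db-1": {"app": "db"},
}
selected = sorted(
    name for name, labels in pods.items()
    if matches(service["spec"]["selector"], labels)
)

print(json.dumps(service, indent=2))
print(selected)
```

Because the selector works on labels rather than pod names, pods can be replaced or rescheduled without clients of the service noticing.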

**Deployments** are also crucial in Kubernetes. Deployments manage the lifecycle of pods and provide features such as rolling updates and rollbacks.
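The following sketch shows what a small Deployment manifest with a rolling-update strategy might look like, again built as a Python dictionary and printed as JSON. The name `web`, the image, and the replica count are example values only.

```python
import json

def make_deployment(name, image, replicas, labels):
    """Return a minimal apps/v1 Deployment manifest as a plain dict."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template.
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Replace pods gradually: at most one extra and one missing at a time.
                "rollingUpdate": {"maxUnavailable": 1, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }

deployment = make_deployment("web", "nginx:1.27", replicas=3, labels={"app": "web"})
print(json.dumps(deployment, indent=2))
```

If the output is saved to a file and applied with `kubectl apply -f`, changing the image and applying again triggers a rolling update, and `kubectl rollout undo deployment/web` rolls it back.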

Pods, services, and deployments underpin most of what follows, so it is worth getting comfortable with them before moving on to how a cluster is put together.

Understanding Kubernetes Architecture

Kubernetes architecture is crucial to understand as it forms the foundation of how the platform operates.

At its core, a Kubernetes cluster consists of a control plane (historically called the master node) and one or more worker nodes. The control plane manages the cluster: its API server accepts changes, its scheduler decides where pods should run, and its controllers keep the actual state in line with the desired state. The worker nodes run the pods, each through an agent called the kubelet.

Pods are the smallest deployable unit in Kubernetes and can contain one or more containers. The scheduler, which is part of the control plane, assigns each pod to a worker node.
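To make the scheduling step more concrete, here is a toy model of it. This is not the real Kubernetes scheduling algorithm, which filters and scores nodes on many criteria. It assumes one simple rule: place each pod on the node with the most free CPU that can still fit it. The node names, pod names, and CPU figures are invented.

```python
def schedule(pods, nodes):
    """Assign each pod to the node with the most free CPU that can fit it.

    pods:  dict of pod name -> requested CPU in millicores
    nodes: dict of node name -> free CPU in millicores
    Returns a dict of pod name -> node name (or None if nothing fits).
    """
    free = dict(nodes)  # copy so the caller's data is not modified
    placement = {}
    for pod, cpu in pods.items():
        candidates = [name for name, available in free.items() if available >= cpu]
        if not candidates:
            placement[pod] = None  # comparable to a pod that stays Pending
            continue
        # Sort by name first so ties are broken deterministically.
        best = max(sorted(candidates), key=lambda name: free[name])
        free[best] -= cpu
        placement[pod] = best
    return placement

# Hypothetical cluster: two worker nodes and three pods.
nodes = {"node-a": 1000, "node-b": 2000}
pods = {"api": 1500, "web": 800, "batch": 900}
print(schedule(pods, nodes))
```

Here `api` lands on `node-b`, `web` on `node-a`, and `batch` fits nowhere, which is roughly what happens when a real cluster runs out of capacity.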

Understanding how Services work in Kubernetes is also essential. Services provide a consistent way to access applications running in the cluster, regardless of which worker node they are on.

Features and Benefits of Kubernetes

Kubernetes is an open-source platform that automates the management, scaling, and deployment of containerized applications. One of its key features is the ability to orchestrate many containers across a cluster of machines, placing and restarting them so that your application keeps running.

Scalability is another benefit of Kubernetes: you can scale your application up or down based on demand, and with several replicas and rolling updates this can usually be done without downtime. The traffic you can handle is still bounded by the resources of the cluster's nodes.
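Automatic scaling in Kubernetes is typically handled by the Horizontal Pod Autoscaler, whose documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The sketch below applies that rule with minimum and maximum bounds. It leaves out details of the real autoscaler, such as its tolerance band and stabilization window, and the metric values are invented.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the basic autoscaling rule and clamp the result to the allowed range."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))

# Average CPU at 90% against a 60% target: scale 4 replicas up to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))

# Average CPU at 30% against a 60% target: scale 4 replicas down to 2.
print(desired_replicas(4, current_metric=30, target_metric=60))
```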

With Kubernetes, you can also ensure that your application is always available and reliable. By automatically restarting failed containers and distributing traffic among healthy ones, Kubernetes helps minimize downtime and keep your application running smoothly.
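This self-healing behavior comes from control loops that compare the desired state with the observed state and act on the difference. The sketch below is a deliberately simplified model of such a loop, not actual controller code: it treats any pod that is not `Running` as failed, and the pod names are invented.

```python
def reconcile(desired_replicas, pods):
    """Return the actions that move the observed pods toward the desired count.

    pods: dict of pod name -> phase, e.g. "Running" or "Failed"
    """
    healthy = sorted(name for name, phase in pods.items() if phase == "Running")
    failed = sorted(name for name, phase in pods.items() if phase != "Running")

    # Failed pods are always removed.
    actions = [("delete", name) for name in failed]

    difference = desired_replicas - len(healthy)
    if difference > 0:
        # Too few healthy pods: create replacements.
        actions += [("create", None)] * difference
    elif difference < 0:
        # Too many healthy pods: remove the surplus.
        actions += [("delete", name) for name in healthy[:-difference]]
    return actions

observed = {"web-1": "Running", "web-2": "Failed", "web-3": "Running"}
print(reconcile(3, observed))
```

Running the loop again after its actions have been carried out yields no further actions, which is the steady state a controller aims for.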

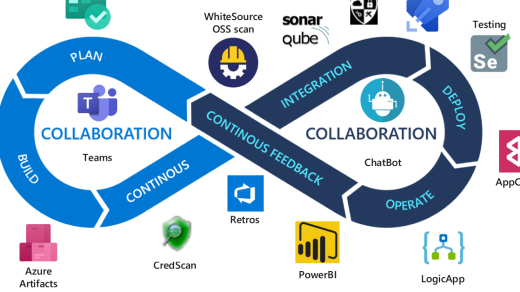

Another important feature of Kubernetes is its flexibility and modularity. It allows you to easily integrate with other tools and services, making it easier to manage and monitor your application. This makes Kubernetes an ideal choice for developers looking to streamline their development process and improve overall efficiency.

Working with Nodes and Pods

When working with **Nodes** and **Pods** in Kubernetes, it’s important to understand the role each plays in the cluster. **Nodes** are the individual machines that make up the cluster, while **Pods** are the smallest deployable units, each running one or more containers.

Nodes can be physical servers or virtual machines, and they are responsible for running applications and services. Pods, on the other hand, provide an isolated environment for containers to run within a Node.

To work effectively with Nodes and Pods, it’s crucial to have a good understanding of how they interact within the Kubernetes ecosystem. This includes deploying Pods to Nodes, managing resources, and monitoring their performance.

By mastering the concepts of Nodes and Pods, you’ll be able to optimize your Kubernetes cluster for better efficiency and scalability. This knowledge is essential for anyone looking to work with containerized applications in a production environment.

The next section moves from individual Pods to the Deployments and Services that manage and expose them.

Managing Deployments and Services

Deployments are used to define the desired state of a pod or a set of pods and manage their lifecycle. Services provide a way to access and communicate with pods, allowing for load balancing and service discovery within a cluster. Namespaces help in organizing and isolating resources within a Kubernetes cluster.
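As a small illustration of namespaces, the sketch below places a Service into a namespace and bundles both resources into a single `List` object, which `kubectl apply -f` can read from one file. The namespace name `demo` and the service definition are placeholders, and `in_namespace` is a helper written for this example.

```python
import json

def in_namespace(manifest, namespace):
    """Return a copy of the manifest with metadata.namespace set."""
    return {**manifest, "metadata": {**manifest["metadata"], "namespace": namespace}}

namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "demo"}}
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {"selector": {"app": "web"}, "ports": [{"port": 80}]},
}

# The namespace is listed first so that it exists before the service is created.
bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [namespace, in_namespace(service, "demo")],
}
print(json.dumps(bundle, indent=2))
```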

To manage deployments and services effectively, rely on the **orchestration** that Kubernetes provides rather than creating pods by hand: you declare the desired state, and Kubernetes automates the deployment, scaling, and management of the containers. Packaging applications as images with a **containerization** tool such as Docker also simplifies deploying them.

Debugging and troubleshooting are essential skills when working with deployments and services in Kubernetes. Understanding how to monitor and log applications, as well as diagnose issues, can help in maintaining a healthy and efficient cluster.
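A typical first pass at diagnosing a misbehaving pod uses a handful of `kubectl` commands. The sketch below only assembles and prints them, so it runs without a cluster. The pod name `web-1` and namespace `demo` are placeholders.

```python
import shlex

def debug_commands(pod, namespace="default"):
    """Return common kubectl inspection commands as argument lists."""
    return [
        # Where is each pod running, and what state is it in?
        ["kubectl", "get", "pods", "-n", namespace, "-o", "wide"],
        # Detailed status and recent events for one pod.
        ["kubectl", "describe", "pod", pod, "-n", namespace],
        # Logs from the previous container instance, useful after a crash.
        ["kubectl", "logs", pod, "-n", namespace, "--previous"],
        # Recent events in the namespace, oldest first.
        ["kubectl", "get", "events", "-n", namespace, "--sort-by=.lastTimestamp"],
    ]

for command in debug_commands("web-1", namespace="demo"):
    print(shlex.join(command))
```

To actually run a command against a cluster, each argument list could be passed to `subprocess.run`.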

By mastering the fundamentals of managing deployments and services in Kubernetes, you can streamline how you develop and deploy **application software** on a cluster.

Using Volumes, Secrets, and ConfigMaps

When working with Kubernetes, understanding how to use Volumes, Secrets, and ConfigMaps is crucial for managing data within your cluster.

Volumes provide a way to store and access data from a container. Data in a volume survives container restarts within a pod, and a PersistentVolume keeps the data even after the pod itself is deleted. This is essential for applications whose data must outlive a single container.
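The sketch below contrasts two common volume types in one pod: an `emptyDir` volume, which is removed together with the pod, and a PersistentVolumeClaim, which asks the cluster for storage that outlives the pod. The names, image, size, and mount paths are example values.

```python
import json

pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "1Gi"}},
    },
}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "worker"},
    "spec": {
        "containers": [{
            "name": "worker",
            "image": "busybox:1.36",
            "command": ["sh", "-c", "sleep 3600"],
            "volumeMounts": [
                {"name": "scratch", "mountPath": "/scratch"},
                {"name": "data", "mountPath": "/data"},
            ],
        }],
        "volumes": [
            # Temporary space that is deleted when the pod is removed.
            {"name": "scratch", "emptyDir": {}},
            # Storage bound to the claim above; it persists across pods.
            {"name": "data", "persistentVolumeClaim": {"claimName": "data"}},
        ],
    },
}

print(json.dumps([pvc, pod], indent=2))
```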

Secrets allow you to store sensitive information, such as passwords or API keys, separately from your application code and container images, which reduces the risk of exposing it. By default, Secret values are stored base64-encoded rather than encrypted, so restrict who can read them and consider enabling encryption at rest.
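The sketch below builds a Secret manifest and then decodes the value again, to show that the base64 encoding used in the `data` field is reversible by anyone who can read the manifest. The secret name and the value are placeholders.

```python
import base64
import json

def make_secret(name, values):
    """Return an Opaque Secret manifest with base64-encoded values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode()).decode()
            for key, value in values.items()
        },
    }

secret = make_secret("db-credentials", {"password": "example-only"})
print(json.dumps(secret, indent=2))

# Base64 is an encoding, not encryption: decoding needs no key.
encoded = secret["data"]["password"]
print(base64.b64decode(encoded).decode())
```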

ConfigMaps are used to store non-sensitive configuration data that can be consumed by your application. This helps to keep your configuration separate from your code, making it easier to manage and update.
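As an example, the sketch below creates a ConfigMap and a container definition that loads all of its keys as environment variables through `envFrom`. The setting names and the image are invented. Values are converted to strings because ConfigMap `data` only holds strings.

```python
import json

def make_config_map(name, settings):
    """Return a ConfigMap manifest; every value is stored as a string."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": {key: str(value) for key, value in settings.items()},
    }

config_map = make_config_map("web-config", {"LOG_LEVEL": "info", "CACHE_TTL_SECONDS": 300})

# A container can load every key of the ConfigMap as environment variables.
container = {
    "name": "web",
    "image": "nginx:1.27",
    "envFrom": [{"configMapRef": {"name": config_map["metadata"]["name"]}}],
}

print(json.dumps(config_map, indent=2))
print(json.dumps(container, indent=2))
```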

By mastering Volumes, Secrets, and ConfigMaps in Kubernetes, you can effectively manage your application’s data and configuration, leading to a more reliable and secure deployment. This knowledge is essential for anyone looking to go deeper into container orchestration.

Advanced Kubernetes Functions

– Advanced Kubernetes functions include features such as auto-scaling, **load balancing**, and **rolling updates**.

– By mastering these advanced functions, users can effectively manage large-scale container deployments with ease.

– Understanding pod affinity and anti-affinity can help optimize resource allocation within a Kubernetes cluster.

– Utilizing advanced networking features like **network policies** can enhance security and performance within a Kubernetes environment.

– Deepening your knowledge of **persistent volumes** and **storage classes** can help ensure data persistence and availability in your Kubernetes applications.

– Advanced troubleshooting techniques, such as **logging** and **monitoring**, are essential for identifying and resolving issues in a Kubernetes deployment.

– Learning how to use **Custom Resource Definitions (CRDs)** can enable users to extend Kubernetes functionality to meet specific application requirements.

– Mastering these advanced Kubernetes functions will elevate your container orchestration skills and empower you to efficiently manage complex applications in a Linux environment.
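To give one of these features a concrete shape, the sketch below builds a network policy that only lets pods with certain labels reach a set of target pods on one TCP port. The labels and port are example values and `allow_ingress_from` is a helper written for this illustration. Network policies only take effect when the cluster's network plugin supports them.

```python
import json

def allow_ingress_from(name, target_labels, source_labels, port):
    """Return a NetworkPolicy that restricts ingress to the target pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # The pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [{
                # Only pods carrying these labels may connect.
                "from": [{"podSelector": {"matchLabels": source_labels}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }

policy = allow_ingress_from(
    "db-allow-api",
    target_labels={"app": "db"},
    source_labels={"app": "api"},
    port=5432,
)
print(json.dumps(policy, indent=2))
```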

FAQ for Kubernetes Beginners

– What is Kubernetes?

Kubernetes is an open-source platform designed to automate the deployment, scaling, and management of containerized applications.

– How is Kubernetes different from Docker?

While Docker is a containerization platform, Kubernetes is a container orchestration tool that helps manage multiple containers across multiple hosts.

– How can I get started with Kubernetes?

To begin learning Kubernetes, we recommend taking a Linux training course to familiarize yourself with the operating system that Kubernetes most commonly runs on.

– Are there any prerequisites for learning Kubernetes?

Having a basic understanding of containerization and Linux operating systems will be beneficial when starting your Kubernetes journey.

– Where can I find more resources for learning Kubernetes?

The Linux Foundation offers a variety of courses and certifications related to Kubernetes, and the Kubernetes project publishes official tutorials and documentation at kubernetes.io.

– Are there any best practices for using Kubernetes?

It is essential to regularly update your Kubernetes cluster and monitor its performance to ensure optimal functionality.

– How can I troubleshoot issues in Kubernetes?

Start with `kubectl logs`, `kubectl describe`, and `kubectl get events` to inspect pods and recent cluster events, and add a dedicated monitoring stack if you need cluster-wide metrics over time.